Congestion Control Arrives in Tor 0.4.7-stable!

Tor has released 0.4.7.7, the first stable Tor release with support for congestion control. Congestion control will eliminate the speed limit of current Tor, as well as reduce latency by minimizing queue lengths at relays. It will result in significant performance improvements in Tor, as well as increased utilization of our network capacity. In order for users to experience these benefits, we need Exit relay operators to upgrade as soon as possible. This post covers a bit of congestion control history, describes technical details, and contains important information for all relay and onion service operators.

What is Congestion Control?

Congestion Control is an adaptive property of distributed networks, whereby a network and its endpoints operate such that utilization is maximized, while minimizing a constraint property, and ensuring fairness between connections. When this optimization problem is solved, the optimal outcome is that all connections transmit an equal fraction of the bandwidth of the slowest router in their shared path, for every path through the network.

TCP Congestion Control solves this optimization problem primarily by minimizing packet drops as the constraint property, effectively increasing speed until router queues overflow, and reducing speed in proportion to these drops. In TCP terminology, the congestion control optimization problem is solved by setting the Congestion Window equal to the Bandwidth-Delay Product of a path.

Some congestion control algorithms can make use of auxiliary information, such as latency, in order to anticipate congestion before the point at which queues overflow and packets drop. Notable examples are TCP Vegas, BitTorrent's LEDBAT, and Google's BBR.

Congestion Control Means a Faster Tor

While Tor uses TCP between relays, Tor was designed without any end-to-end congestion control through the network itself. Instead, it set a fixed window size of 1000 512-byte Tor cells on each circuit. In the early days of Tor, this resulted in unbearable latency caused by excessive queue delay, because these windows were much larger than each client's fair share of the Bandwidth-Delay Product on any given circuit. Users could wait up to a minute for a page load to respond. Relays also used a huge amount of memory in these cases.
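To get a feel for the numbers, here is a minimal sketch of a simplified steady-state model. The model is an assumption made for illustration, not something taken from Tor's code: if a sender keeps a full window in flight and the bottleneck drains at the client's fair-share rate, every cell beyond the Bandwidth-Delay Product waits in a queue. The function names and the example rates are hypothetical.

```python
CELL_SIZE_BYTES = 512       # cell size quoted in the text above
FIXED_WINDOW_CELLS = 1000   # legacy fixed circuit window


def bdp_cells(fair_share_bytes_per_sec, rtt_seconds):
    """Cells that fit in flight without queueing: bandwidth x round-trip time."""
    return fair_share_bytes_per_sec * rtt_seconds / CELL_SIZE_BYTES


def queue_delay_seconds(fair_share_bytes_per_sec, rtt_seconds,
                        window_cells=FIXED_WINDOW_CELLS):
    """Rough extra delay when the window exceeds the BDP.

    Simplified model: cells beyond the BDP sit in relay queues and
    drain at the fair-share rate.
    """
    excess_cells = max(
        0.0, window_cells - bdp_cells(fair_share_bytes_per_sec, rtt_seconds)
    )
    return excess_cells * CELL_SIZE_BYTES / fair_share_bytes_per_sec
```

For example, with a fair share of 51,200 bytes per second and a 0.5 second round trip, the BDP is 50 cells, and this model puts the queue delay of a full 1000-cell window at about 9.5 seconds. Once the fair share is large enough that the BDP exceeds 1000 cells, the modeled queue delay drops to zero.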

Once spare network capacity increased such that the spare Bandwidth-Delay Product of circuits exceeded this fixed window size of 1000 cells, overall latency improved due to lower queue delay, but throughput began to level off. Because the Bandwidth-Delay Product was artificially limited to 1000 cells, this fixed window size became a speed limit, with the property that lower-latency circuits had higher throughput than high-latency circuits, in inverse proportion to their latency.
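The speed limit follows from simple arithmetic: a circuit can deliver at most one window of data per round trip. The sketch below assumes exactly that and ignores protocol overhead and the details of how the window is replenished; the function name is hypothetical.

```python
CELL_SIZE_BYTES = 512       # cell size quoted in the text
FIXED_WINDOW_CELLS = 1000   # legacy fixed circuit window


def max_throughput_bytes_per_sec(rtt_seconds, window_cells=FIXED_WINDOW_CELLS):
    """Upper bound on throughput: one full window per round trip."""
    return window_cells * CELL_SIZE_BYTES / rtt_seconds
```

With a 0.5 second round trip this gives 1,024,000 bytes per second. Halving the round-trip time to 0.25 seconds doubles the cap to 2,048,000 bytes per second, no matter how much spare capacity the relays have.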

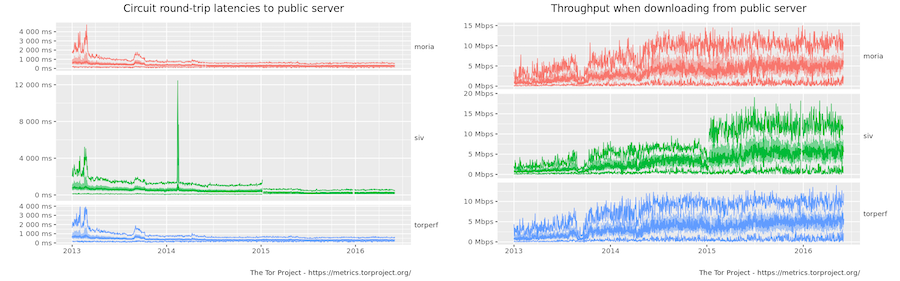

This turning point with respect to the window size happened around 2015:

When this capacity turning point was reached, congestion control became something that would not only improve latency, but also significantly increase throughput.

This turning point made congestion control a top-priority improvement for the Tor network! Congestion control will remove this speed limit entirely, and will also reduce the impact of path latency on throughput.

History of Congestion Control Research on Tor

Unfortunately, because Tor's circuit cryptography cannot support packet drops or reordering, the research community struggled for nearly two decades to determine a way to provide congestion control on the Tor network.

Crucially, we rejected mechanisms to provide congestion control by allowing packet drops, due to the ability to introduce end-to-end side channels in the packet drop pattern.

This ultimately left only a very small class of candidate algorithms to consider: those that used Round-Trip Time to measure queue delay as a congestion signal, and those that directly measured Bandwidth-Delay Product. The upshot is that this class of algorithms only requires clients, Exit relays, and onion services to upgrade; they do not require any changes to intermediate relays.

We ultimately specified three candidate algorithms informed by prior Tor and TCP research: Tor-Westwood, Tor-Vegas, and Tor-NOLA. These algorithms are detailed in Tor Proposal 324.

Tor-Westwood is based on the unnamed RTT threshold algorithm from the DefenestraTor Paper, in combination with Bandwidth-Delay Product estimation ideas from TCP Westwood.

Tor-Vegas is very closely based on TCP Vegas. TCP Vegas uses a much more fine-grained RTT ratio to directly estimate the total queue length on the path, and then targets a specific queue length as the constraint criterion. TCP Vegas is extremely efficient and effective, and is able to achieve fairness without any packet drops at all. However, it was never deployed on the Internet, because it was out-competed by the more aggressive and already deployed TCP Reno. Because Reno continues increasing speed until packet drops happen, TCP Reno would end up soaking up the capacity of less aggressive Vegas flows that did not drop packets.
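The RTT-ratio idea can be sketched as follows. This is a simplified illustration in the spirit of TCP Vegas, not the Tor implementation: the function name, the thresholds `alpha` and `beta`, the step size, and the window floor are all hypothetical values chosen for the example. Proposal 324 has the actual algorithm and parameters.

```python
def vegas_update(cwnd, rtt_min_ms, rtt_current_ms,
                 alpha=100, beta=200, step=50, cwnd_min=200):
    """One Vegas-style congestion window update, in cells.

    alpha, beta, step and cwnd_min are illustrative values only.
    """
    # If nothing were queued, the current RTT would equal the minimum RTT.
    # The ratio scales the window down to the part that fits "on the wire".
    bdp_estimate = cwnd * rtt_min_ms / rtt_current_ms
    # Whatever does not fit on the wire is estimated to be sitting in queues.
    queue_use = cwnd - bdp_estimate

    if queue_use < alpha:
        cwnd += step      # queues nearly empty: probe for more bandwidth
    elif queue_use > beta:
        cwnd -= step      # queues too long: back off before they grow further
    # Otherwise the queue is inside the target band: hold the window steady.
    return max(cwnd, cwnd_min)
```

When the current RTT equals the minimum RTT, the estimated queue is empty and the window grows. When the RTT doubles, half of the window is estimated to be queued and the window shrinks. No packet drop is needed as a signal at any point.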

The final algorithm, Tor-NOLA, was created to test the behavior of Bandwidth-Delay Product estimation used directly as the congestion window, without any adaptation.

An additional component, called Flow Control, is necessary to handle the case where an Internet destination or application is slower than Tor. We won't cover Flow Control in this post, but the interested reader can examine those details in Section 4 of Proposal 324.

Implementation, Simulation, and Deployment

We implemented all three algorithms (Tor-Westwood, Tor-Vegas, and Tor-NOLA) in Tor 0.4.7, and subjected them to extensive evaluation in the Shadow Simulator.

The end result was that Tor-Westwood and Tor-NOLA exhibited ack compression, which caused them to wildly overestimate the Bandwidth-Delay Product and led to runaway congestion conditions. Standard mechanisms for dealing with ack compression, such as smoothing, probing, and long-term averaging, did little to address this, perhaps because of the lack of packet drops as a backstop on queue pressure. Tor-Westwood also exhibited runaway conditions due to the nature of its RTT threshold. (As an aside, Google's BBR algorithm also has these problems, and relies on packet drops as a backstop as well.)

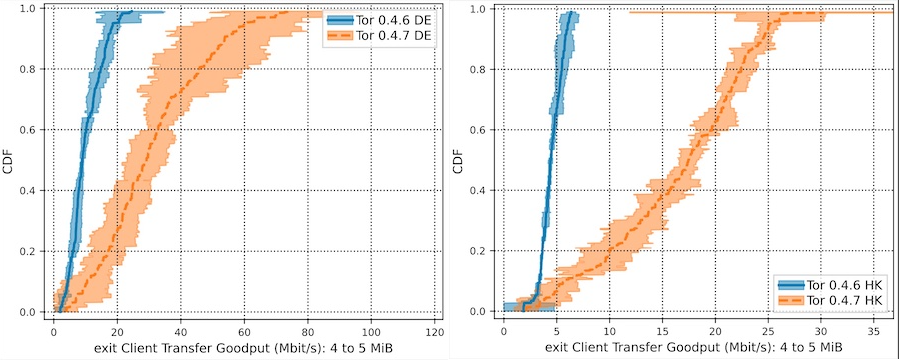

Tor-Vegas performed beautifully, almost exactly as the theory predicted. Here's the Shadow Simulator's throughput graphs of clients with simulated locations in Germany and Hong Kong:

While there is still a difference in throughput between these two locations, the speed limit from 0.4.6 Tor is clearly gone. End-to-end latency was not affected at all, according to the simulator.

Additionally, Tor-Vegas was not out-competed by legacy Tor traffic, allowing us to enable it as soon as 0.4.7 came out. We also gain protection from rogue algorithms via the combination of KIST and Circuit-EWMA, which were previously deployed on Tor to address latency problems during the BDP bottleneck era.

Exit Relay Operators: Please Upgrade!

Users of Tor versions 0.4.7 and above will experience faster performance when using Exits or Onion Services that have upgraded to 0.4.7.

This means that in order for users to see the benefits of these improvements, we need our Exit relay operators to upgrade to the new Tor 0.4.7 stable series, asap!

Packages for Debian, Ubuntu, and Fedora/CentOS/RHEL are already available. Please follow those links for instructions on using our packaging repos for those distributions, and upgrade asap!

BSD users should be able to install this release from their flavor's ports system.

If you run into problems while upgrading your relay, you can ask your questions on the public tor-relays mailing list and Relay Operator sub-category on the Tor Forum. You can also get help by joining the channel #tor-relays.

All Relay Operators: Be Prepared to Set Bandwidth Limits

Non-exit relay operators do not need to upgrade for congestion control to work, but this also means they may be surprised by the network effects of congestion control traffic running through their relays.

The faster performance and increased utilization of congestion control means that we will soon be able to use the full capacity of the Tor network. This means that all relays will soon experience new bottlenecks. Congestion control should prevent these bottlenecks from overwhelming relays completely, but this behavior may come as a surprise to operators who were used to the last several years of low CPU and bandwidth utilization.

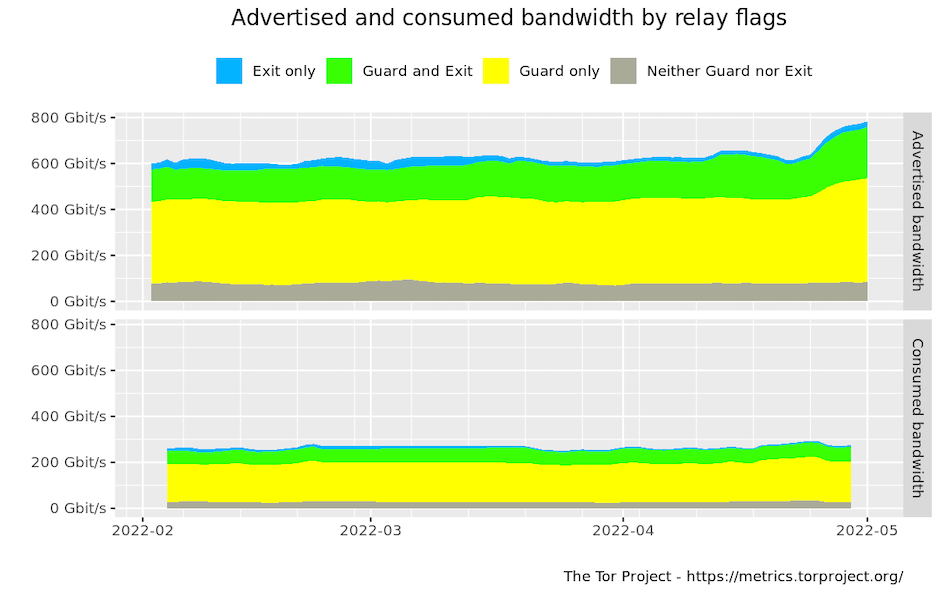

We are already seeing an increase in the Advertised Bandwidth of relays as a result of some higher-throughput congestion control circuit use, similar to our previous flooding experiments, even though most clients are not yet using congestion control:

This increase is because Advertised Bandwidth is computed from the highest 7-day burst of traffic seen, whereas Consumed Bandwidth is the average byte rate. As more clients upgrade, particularly after a Tor Browser Stable release with 0.4.7 is made, the Consumed Bandwidth of the network should also rise. We expect to make this Tor Browser Stable release on May 31st, 2022.
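The difference between the two metrics can be shown with a toy example. The sketch below follows only the description in this post (a peak versus an average) and leaves out the details of how Tor actually measures and reports bandwidth; the function names and the sample values are made up.

```python
def advertised_estimate(rate_samples):
    """Peak-style metric: the highest burst seen in the measurement period."""
    return max(rate_samples)


def consumed_estimate(rate_samples):
    """Average-style metric: the mean byte rate over the same period."""
    return sum(rate_samples) / len(rate_samples)


# Hypothetical byte-rate samples: mostly 2 MB/s, with one 10 MB/s burst
# caused by a single fast congestion control circuit.
samples = [2_000_000] * 9 + [10_000_000]
```

With these samples the peak-style metric jumps to 10,000,000 bytes per second, while the average only moves to 2,800,000. A handful of fast circuits is enough to raise the first number long before the second one follows.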

Once users migrate to this new release, relay operators who pay for bandwidth by the gigabyte may want to consider enabling hibernation, to avoid surprise cost increases.

This increased traffic may also cause your relay CPU usage to spike, due to the increased cryptographic load of the additional traffic. In theory, Tor-Vegas congestion control should treat CPU throughput bottlenecks exactly the same as bandwidth bottlenecks, and back off once a CPU bottleneck causes queue delay. However, if you also pay for CPU, you may want to rate limit your relay's bandwidth.
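When choosing a limit, it can help to translate between a monthly traffic budget and a sustained byte rate. The sketch below is plain arithmetic with hypothetical function names. It assumes a 30-day month and does not model how Tor counts traffic in each direction, so treat the result as a starting point and check the Tor manual for the exact semantics of the bandwidth and accounting options.

```python
SECONDS_PER_DAY = 86_400


def average_rate_for_budget(budget_bytes, days=30):
    """Sustained byte rate that would use up the budget in `days` days."""
    return budget_bytes / (days * SECONDS_PER_DAY)


def traffic_at_rate(rate_bytes_per_sec, days=30):
    """Total bytes moved when running at a constant rate for `days` days."""
    return rate_bytes_per_sec * days * SECONDS_PER_DAY
```

For example, a relay that sustains 1,000,000 bytes per second for 30 days moves 2,592,000,000,000 bytes, or roughly 2.6 TB.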

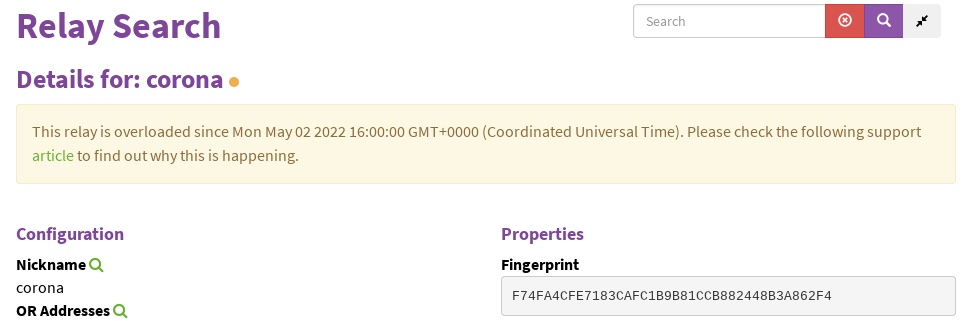

Relays may also experience overload on the Relay Search Portal. Here is an example of that:

This overload indicator may appear for several reasons. If your relay has this overload indicator, follow the instructions on our overload support page, in order to diagnose the specific cause. If the cause is CPU overload, consider setting bandwidth limits, to reduce the traffic through your relay.

If you have issues diagnosing or eliminating the cause of overload, you can ask questions on the public tor-relays mailing list and Relay Operator sub-category on the Tor Forum. You can also get help by joining the channel #tor-relays.

Onion Service Operators Should Also Upgrade

Just like Exit relays, Onion Services also need to upgrade to 0.4.7 for users to be able to use congestion control with them.

Additionally, Tor 0.4.7 has a security improvement for short-lived onion services, called Vanguards-Lite. This system will reduce the risk of attacks that can discover the Guard relay of an onion service or onion client, so long as that onion service is around for a month or less. Longer-lived onion services are still encouraged to use the vanguards addon.

Deployment Plan

The Tor Browser Alpha series already supports congestion control, but it won't experience improved performance unless an 0.4.7 Exit or Onion Service is used with it.

Because our network is roughly 25% utilized, we expect that throughput may be very high for the first few users who use 0.4.7 on fast circuits with fast 0.4.7 Exits, until the point where most clients have upgraded. At that point, a new equilibrium will be reached in terms of throughput and network utilization.

For this reason, we are holding back on releasing a Tor Browser Stable with congestion control, until enough Exits have upgraded to make the experience more uniform. We hope this will happen by May 31st.

Also for this reason, we won't be upgrading our Tor performance metrics sources to 0.4.7 until enough Exits have upgraded for those measurements to be an accurate reflection of congestion control. So these improvements will not be reflected in our performance metrics until we upgrade those onionperf instances, either.

The Future

The astute reader will note that we rejected datagram transports. However, this does not mean that Tor will never carry UDP traffic. On the contrary, congestion control deployment means that queue delay and latency will be much more stable and predictable. This will enable us to carry UDP without packet drops in the network, and only drop UDP at the edges, when the congestion window becomes full. We are hopeful that this new behavior will match what existing UDP protocols expect, allowing their use over Tor.

This still leaves the problem that very slow Tor relays may become a bottleneck, prohibiting the use of interactive voice and video over UDP while using them in a circuit. To address this problem, we will be examining our Guard and Fast relay bandwidth cutoffs, to avoid giving these flags to relays that are too slow to handle multiple clients at once.

Additionally, in Tor 0.4.8, we will be implementing a traffic splitting mechanism based on a previous Tor research paper called Conflux, with improvements from recent Multipath TCP research. This system is specified in Tor Proposal 329.

Conflux has the ability to rebalance traffic over multiple paths to an Exit relay, optimizing for either throughput or latency.

With Conflux, Exit relays will become the new speed limit of Tor, making fast Exits more valuable than ever before!
