Exploring Tor with carml

carml is a command-line, pipe-friendly tool for exploring and controlling a running Tor daemon. Most of the sub-commands will be interesting to developers and tinkerers; a few of these will be interesting to end users. This post concentrates on the developers and tinkerers.

carml is a Python program written using Twisted and my library txtorcon. If you're familiar with Python, create a new virtualenv and pip install carml. There are more verbose install instructions available. Once this works, you should be able to type carml and see the help output.

Connecting to Tor

carml works somewhat like git: a normal invocation is carml followed by some global options and then a sub-command with its own options. The most useful global option is --connect <endpoint>, which tells carml how to connect to the control port. Technically this can be any Twisted client endpoint string, but for Tor it will usually be tcp:<port> (or simply a port) or unix:/var/run/tor/control for a Unix socket.

For Tor Browser Bundle, use carml --connect 9151. A "system" Tor is typically reachable with carml --connect 9051 or carml --connect unix:/var/run/tor/control. You may need to enable the control port in Tor's configuration and reload (or restart) Tor. More details are in the documentation.
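To make the endpoint forms concrete, here is a minimal Python sketch that classifies a --connect value. The helper parse_control_endpoint and the ControlEndpoint type are hypothetical (this is not carml's or Twisted's code). It only handles the simple forms mentioned above rather than Twisted's full endpoint syntax, and treating a bare port as TCP on 127.0.0.1 is an assumption.

```python
from typing import NamedTuple, Optional


class ControlEndpoint(NamedTuple):
    kind: str               # "tcp" or "unix"
    host: Optional[str]     # set only for TCP endpoints
    port: Optional[int]     # set only for TCP endpoints
    path: Optional[str]     # set only for Unix-socket endpoints


def parse_control_endpoint(spec: str) -> ControlEndpoint:
    """Hypothetical helper: classify the simple --connect forms."""
    if spec.isdigit():
        # Assumption for this sketch: a bare port means TCP on localhost.
        return ControlEndpoint("tcp", "127.0.0.1", int(spec), None)
    kind, _, rest = spec.partition(":")
    if kind == "unix" and rest:
        return ControlEndpoint("unix", None, None, rest)
    if kind == "tcp" and rest:
        parts = rest.split(":")
        if len(parts) == 1 and parts[0].isdigit():
            return ControlEndpoint("tcp", "127.0.0.1", int(parts[0]), None)
        if len(parts) == 2 and parts[1].isdigit():
            return ControlEndpoint("tcp", parts[0], int(parts[1]), None)
    raise ValueError(f"unrecognized control endpoint: {spec!r}")
```

For example, parse_control_endpoint("9151") describes the Tor Browser Bundle control port, and parse_control_endpoint("unix:/var/run/tor/control") describes a system Tor's socket.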

Start Exploring

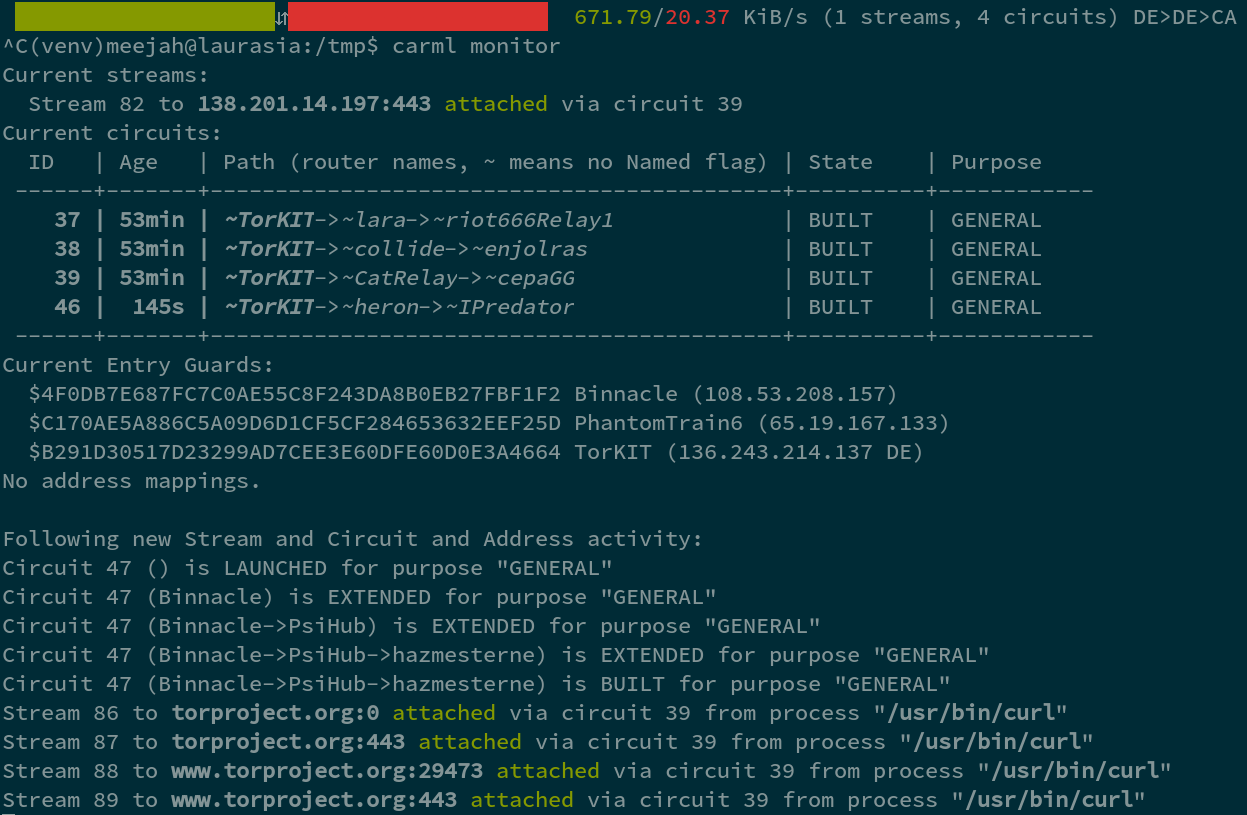

The most interesting general-purpose command is probably carml monitor. Try running it for a while to see what your Tor client is doing; it gives good insight into Tor's behavior.

A (very basic) usage graph is available via carml graph, showing what bandwidth you're using (the scaling needs work; PRs welcome!).

Explicit Circuits

Sometimes you want to use a particular circuit, for example when you're trying to confirm some possibly nefarious activity by an exit. We can combine the carml circ and carml stream commands:

carml circ --build "*,*,4D08D29FDE23E75493E4942BAFDFFB90430A81D2"

This builds a 3-hop circuit through any entry guard, any middle relay and then one particular exit (identified by its ID). You can also identify a relay by name (only if the name is unique!), but hashes (fingerprints) are highly recommended. Of course, you could explicitly choose the other hops as well. Note that the stars leave the selection up to carml/txtorcon, which does not (and cannot) use Tor's exact path-selection algorithm.
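The path string is just a comma-separated list of hops. As a hypothetical sketch (not carml's parser), the following splits such a string and turns "*" into "let the software choose". Once every hop is a concrete relay, it formats the EXTENDCIRCUIT command from Tor's control protocol, where circuit ID 0 asks Tor to build a new circuit. Picking real relays for the "*" hops, which carml/txtorcon does for you, is deliberately left out.

```python
import re
from typing import List, Optional

# A relay fingerprint is 40 hex digits, optionally written with a leading "$".
_FINGERPRINT = re.compile(r"\$?[0-9A-Fa-f]{40}")


def parse_path_spec(spec: str) -> List[Optional[str]]:
    """Split a "*,*,FP" style path; None means "any relay" for that hop."""
    hops: List[Optional[str]] = []
    for raw in spec.split(","):
        hop = raw.strip()
        if not hop:
            raise ValueError(f"empty hop in path {spec!r}")
        if hop == "*":
            hops.append(None)
        elif _FINGERPRINT.fullmatch(hop):
            # Normalize to the "$FINGERPRINT" form used by the control protocol.
            hops.append("$" + hop.lstrip("$").upper())
        else:
            hops.append(hop)  # treated as a nickname; only safe if unique
    return hops


def extendcircuit_command(hops: List[Optional[str]]) -> str:
    """Format an EXTENDCIRCUIT request for a brand-new circuit (ID 0)."""
    if any(hop is None for hop in hops):
        raise ValueError("pick concrete relays for every '*' hop first")
    return "EXTENDCIRCUIT 0 " + ",".join(hops) + "\r\n"
```

Keeping fingerprints in the "$"-prefixed form means they can be passed straight through as server specs, while nicknames pass through unchanged (with the uniqueness caveat above).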

Next, you'll want to attach streams to that circuit. carml circ will have printed something like "Circuit ID 1234". Now we can use carml stream:

carml stream --attach 1234

This will cause all new streams to be attached to circuit 1234 (until you exit the carml stream command). In another terminal, try torsocks curl https://www.torproject.org to visit the Tor Project's website via your new circuit. Once you kill the carml stream command, Tor will select circuits via its normal algorithm once again.

Note that it's not currently possible to attach streams destined for onion services (this is a Tor limitation, see connection_edge.c).
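At the control-protocol level, attaching streams yourself relies on two features: setting __LeaveStreamsUnattached=1 so Tor waits for the controller, then answering each new STREAM event with ATTACHSTREAM. The Python sketch below only formats those commands from a raw event line. It is a simplified illustration under that assumption, not how carml or txtorcon implement it, and it skips .onion targets because of the limitation just mentioned.

```python
from typing import Optional

# Tells Tor to leave new streams unattached until the controller acts.
LEAVE_UNATTACHED = "SETCONF __LeaveStreamsUnattached=1\r\n"


def attach_command(event_line: str, circuit_id: int) -> Optional[str]:
    """Return an ATTACHSTREAM command for a NEW stream event, else None.

    Expected event shape (Tor control protocol):
    650 STREAM <StreamID> <StreamStatus> <CircuitID> <Target> [keyword args]
    """
    parts = event_line.split()
    if len(parts) < 6 or parts[:2] != ["650", "STREAM"]:
        return None
    stream_id, status, target = parts[2], parts[3], parts[5]
    if status != "NEW":
        return None
    host = target.rpartition(":")[0]
    if host.endswith(".onion"):
        return None  # attaching onion-service streams is not supported
    return f"ATTACHSTREAM {stream_id} {circuit_id}\r\n"
```

When the controller stops answering (as when you kill carml stream), the setting must be turned off again, or new streams will simply wait; that is one reason to let a tool manage this for you.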

Debugging Tor

The control protocol can deliver all of Tor's events, including INFO and DEBUG log messages. This lets you easily turn on DEBUG and INFO logging via the carml events command:

carml events INFO DEBUG

This can of course be piped through grep or anything else. You can give a --count to carml events, which is useful for some of the other events.

For example, if you want to "do something" every time a new consensus document is published, you could do this:

carml events --once NEWCONSENSUS

This waits until exactly one NEWCONSENSUS event is produced, dumps its contents (the new consensus) to stdout and exits. With a bash script that runs the above (perhaps piped to /dev/null), you can ensure a new consensus is available before continuing.

Events that Tor emits are documented in torspec section 4.1. You can use carml to list them, with carml events --list.

Another example: you might want to check every hour that your relay is still listed in the consensus. One way would be to schedule a cron job shortly before the top of each hour that does something like:

carml events --once NEWCONSENSUS | grep

# log something useful if grep didn't find anything
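One detail matters for the grep step: in the consensus, each relay's "r" line carries its identity as base64 (with the trailing "=" removed) of the 20-byte fingerprint, not as hex, so a hex fingerprint must be re-encoded before searching. The Python sketch below does that check, assuming the dumped NEWCONSENSUS event contains those router-status "r" lines as torspec describes; the function names are hypothetical.

```python
import base64
from typing import Iterable, List


def consensus_identity(fingerprint: str) -> str:
    """Hex relay fingerprint -> base64 identity as written in "r" lines."""
    digest = bytes.fromhex(fingerprint.replace(" ", "").lstrip("$"))
    if len(digest) != 20:
        raise ValueError("relay fingerprints are 20 bytes (40 hex digits)")
    return base64.b64encode(digest).decode("ascii").rstrip("=")


def relay_listed(consensus_lines: Iterable[str], fingerprint: str) -> bool:
    """True if any "r <nickname> <identity> ..." line matches the relay."""
    wanted = consensus_identity(fingerprint)
    for line in consensus_lines:
        fields = line.split()
        if len(fields) >= 3 and fields[0] == "r" and fields[2] == wanted:
            return True
    return False


def main(argv: List[str], lines: Iterable[str]) -> int:
    """Exit status for a cron wrapper: 0 if listed, 1 if missing."""
    return 0 if relay_listed(lines, argv[1]) else 1
```

In a small script fed by carml events --once NEWCONSENSUS, calling sys.exit(main(sys.argv, sys.stdin)) would give cron a non-zero exit status to act on when the relay is missing.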

Raw Commands

You can issue a raw control-port command to Tor via the carml cmd sub-command. This takes care of authentication and so on, and exits when the command succeeds (or errors). This can be useful for testing new commands under development, since the inputs and outputs are not validated in any way.

Every argument after cmd is joined back together with spaces before being sent to Tor, so you don't have to quote things.

carml cmd getinfo info/names

carml cmd ADD_ONION NEW:BEST Port=1234
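The control protocol itself is line-based. A command is a single CRLF-terminated line, and each reply line starts with a three-digit status code followed by "-" (more lines follow), "+" (a data block ending with a lone "." follows) or a space (the final line). As a rough sketch of what happens after authentication, here is Python that builds the command line by joining arguments as described above and parses one reply. The function names are hypothetical, and real clients such as txtorcon handle more cases (asynchronous 650 events, for one).

```python
from typing import List, Tuple


def build_command(args: List[str]) -> bytes:
    """Join arguments with single spaces and terminate with CRLF."""
    return (" ".join(args) + "\r\n").encode("ascii")


def parse_reply(raw: str) -> Tuple[int, List[str]]:
    """Return (final status code, reply text lines) for one reply."""
    lines = raw.split("\r\n")
    out: List[str] = []
    i = 0
    while i < len(lines):
        line = lines[i]
        code, sep, text = line[:3], line[3:4], line[4:]
        if not code.isdigit():
            raise ValueError(f"malformed reply line: {line!r}")
        out.append(text)
        if sep == " ":
            return int(code), out  # final line of the reply
        if sep == "+":
            # Data block: read until a lone ".", un-doubling leading periods.
            i += 1
            while i < len(lines) and lines[i] != ".":
                data = lines[i]
                out.append(data[1:] if data.startswith(".") else data)
                i += 1
        elif sep != "-":
            raise ValueError(f"malformed reply line: {line!r}")
        i += 1
    raise ValueError("reply ended without a final status line")
```

A 2xx final code means success; anything else (for example a 5xx error) is the "or errors" case mentioned above.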

End-User Commands

Briefly, the commands intended to be "end-user useful" are:

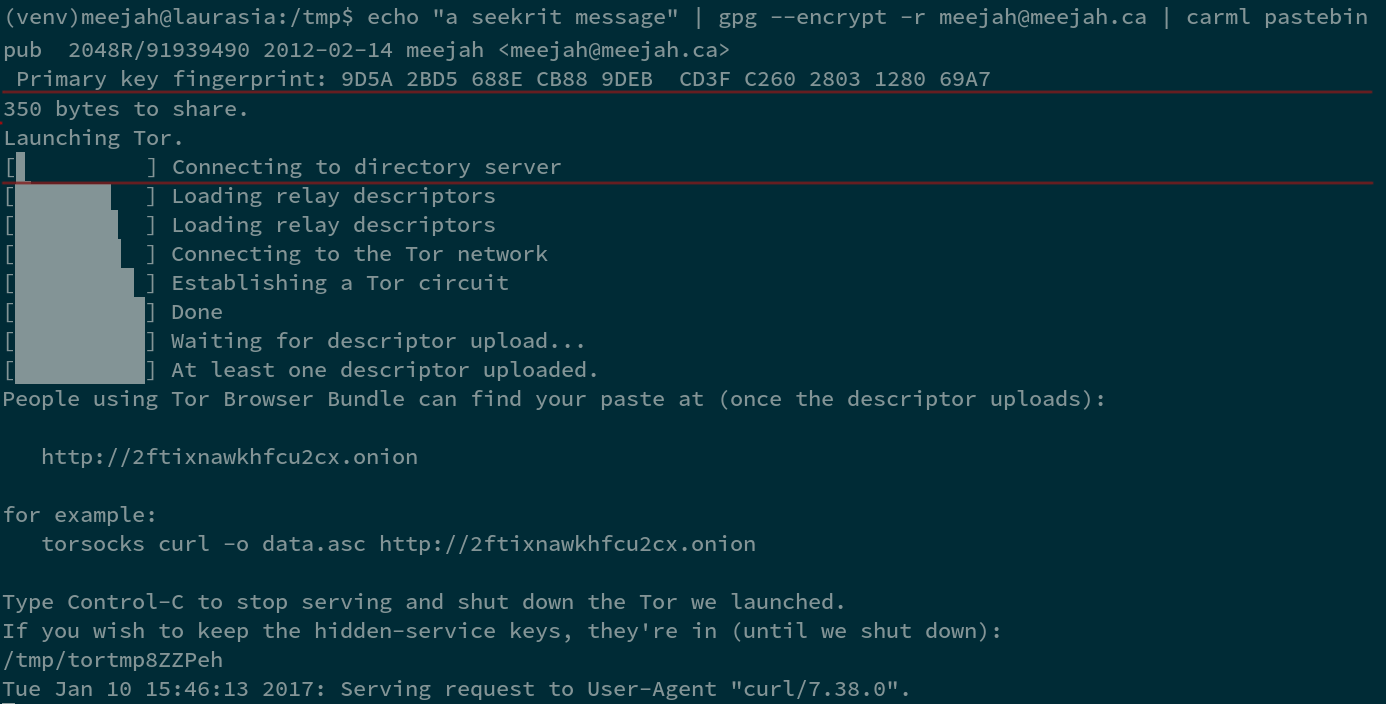

carml pastebin: create a new hidden service and serve a directory, a single file, or stdin at it. On the other side, use carml copybin or simply torsocks curl .... Securely distributing the address is still left as an "exercise for the reader".

carml tbb: download, verify and run a new Tor Browser Bundle. This pins the public key of torproject.org and bundles the keys of the likely suspects that sign the bundles. It is less useful now that TBB auto-updates.

carml newid: sends the NEWNYM signal, which clears the DNS cache and causes Tor not to reuse any existing circuits for new requests.

carml monitor shows you what Tor is doing currently. Similarly, carml graph shows you just the current in/out bandwidth.

Pure Entertainment

Commands that can provide hours of entertainment include:

- carml xplanet

- carml tmux

I hope you find carml useful. Suggestions, bugs, and fixes all welcome on carml's GitHub page.

See Also

There is also a curses-based Tor tool called ARM (blog post), which is currently being rewritten as "Nyx".

Comments

Please note that the comment area below has been archived.

"Suggestions, bugs, and

"Suggestions, bugs, and fixes all welcome on carml's GitHub page."

I think the link here is wrong.

Yes, you are right, thanks! Now fixed :)

Very interesting, thanks for this blog post! :)

Sounds great, but I have a question about SSL for:

https://carml.readthedocs.io/en/latest/installation.html

Are you really using SSL3 DHE RSA AES 128/256 SHA? Those are deprecated, yes?

That's a third party service. So ask them? (https://readthedocs.org/)

For Tor, keeping files under third party protection is like accepting "secure" (bugged!) office space from Google. Or even worse, a US military base. Tor Project has done the first but I hope not the second. Although I worry a lot about RU/CN intentions towards TP, one must also worry even more about USG intentions towards TP.

I use ReadTheDocs because it saves me a ton of time self-hosting documentation.

If you're worried about trusting ReadTheDocs, you have two options: you can build the docs yourself (type make html in the docs/ directory) or you can use the hidden-service hosted docs (although they tend to be "not as up-to-date" as the ReadTheDocs ones; typically they get updated when I do a release but don't track master like ReadTheDocs does).

ReadTheDocs has no more authority over the Git repository than you do (i.e. they have read-only clone access).

"or you can use the

"or you can use the hidden-service hosted docs"

Any reason you did not include the .onion link?

I understand, but this habit (not only yours!) worries me since it means devs are not using the tools we have to provide authentication/security.

Cool project, BTW!

Thanks for replying.

I can't tell if you mean the "habit of not using HTTPS" or the "habit of using Web services run by others" ... in either case you should be getting the actual software from Git (which has signed tags) or from PyPI (which uses HTTPS and has signatures). I agree it would be nice if ReadTheDocs used TLS.

I was thinking of another project (txtorcon) when talking about the hidden-service hosted docs. Sorry about that!

However, this spurred me to turn on a hidden-service for carml as well, and it is now at: carmlion6vt4az2q.onion/.

Thanks for replying!

The new onion appears not to use TLS and I am not sure how to attempt to verify its authenticity beyond the fact that someone appears (to my Tor Browser) to have posted a link in what appears (to my Tor Browser) to be a Tor Project blog.

Not trying to be difficult--- I really don't understand how to obtain a verified version of the code. Ideally GPG signed using a key signed using other keys I know (Web of Trust).

No, it does not use TLS and never will. It's already encrypted (via Tor) and is a self-certifying URL. You'll find I edited the URL into the GitHub page and it's on my Web site.

So that's already 3 accounts someone would have to compromise to feed you an invalid onion address...the next release will have it in the README, and then you'll also have a signed tag. And all that just to feed you fake documentation (no actual software is on ReadTheDocs nor the onion documentation site).

OK, clearly I don't yet understand how this works.

Can you clarify "self-certifying URL"? How does the URL certify itself?

What about an onion address given somewhere on the open web which claims to be the address of a SecureDrop site run by some reputable journalism outfit (rather than some nasty spying outfit claiming to be the reputable journalism outfit)?

With a TLS certificate, you have to trust "the Certificate Authority infrastructure" to be sure you're connecting to the intended target. An onion address is derived from the public half of the keypair that "owns" the service so you can be sure that you're connecting to the intended target as long as you're sure you've got the correct .onion address. You can read more on Tor's page about hidden services (note that these are usually referred to as "onion services" now).

Of course, this doesn't magically solve the problem of securely distributing the addresses. You still have to be convinced that the .onion address you've received is the correct one!

Also for completeness: if the operator of the onion service has lost control of their private key, then anyone can "impersonate" that service (just like if you lost the private key corresponding to a TLS certificate).
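To illustrate what "derived from the public key" means, here is a small Python sketch following Tor's rendezvous specifications. A 16-character (v2) address, like the ones in this thread, is the base32 encoding of the first 80 bits of the SHA-1 hash of the DER-encoded RSA public key. A newer 56-character (v3) address encodes the ed25519 public key itself, plus a two-byte SHA3-256 checksum and a version byte. The function names are made up for this example, and it only checks an address against a key you already trust, which is why distributing the address securely still matters.

```python
import base64
import hashlib


def onion_v2_address(der_public_key: bytes) -> str:
    """v2: base32 of the first 80 bits of SHA-1 over the DER-encoded RSA key."""
    digest = hashlib.sha1(der_public_key).digest()[:10]
    return base64.b32encode(digest).decode("ascii").lower() + ".onion"


def onion_v3_address(ed25519_public_key: bytes) -> str:
    """v3: base32(PUBKEY | CHECKSUM | VERSION) with a SHA3-256 checksum."""
    if len(ed25519_public_key) != 32:
        raise ValueError("v3 onion keys are 32-byte ed25519 public keys")
    version = b"\x03"
    checksum = hashlib.sha3_256(
        b".onion checksum" + ed25519_public_key + version
    ).digest()[:2]
    raw = ed25519_public_key + checksum + version
    return base64.b32encode(raw).decode("ascii").lower() + ".onion"


def onion_v3_matches_key(address: str, ed25519_public_key: bytes) -> bool:
    """True only if the address was derived from exactly this key."""
    return address.lower() == onion_v3_address(ed25519_public_key)
```

Because the address is computed from the key, no certificate authority is involved; the trust question moves entirely to whether you obtained the right address.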

Thanks for replying and for not crying "OT" since I need to make some decisions ASAP.

> With a TLS certificate, you have to trust "the Certificate Authority infrastructure" to be sure you're connecting to the intended target.

Yes, the trouble is that it seems we need to fear that all kinds of governments may force CAs to collaborate in evil tricks.

> An onion address is derived from the public half of the keypair that "owns" the service so you can be sure that you're connecting to the intended target as long as you're sure you've got the correct .onion address.

That's good to know, thanks.

Any advice for how to verify that I do have the right onion address? For example, are these correct? (They are published at https web pages, but there remains some doubt that my Tor Browser may have been directed to a phishing site, or that the links might have been maliciously altered by intruders.)

Intercept-Secure-Drop

http://y6xjgkgwj47us5ca.onion/

Propublica Secure Drop site

http://pubdrop4dw6rk3aq.onion/

The Intercept has a cert which at least names theintercept.com. But Propublica appears to use a cert which names Fastly.

> You can read more on Tor's page about hidden services (note that these are usually referred to as "onion services" now).

Saved the link, at first glance didn't find the information I need ASAP.

> if the operator of the onion service has lost control of their private key, then anyone can "impersonate" that service (just like if you lost the private key corresponding to a TLS certificate).

And it seems that many keys attributed to reporters at The Intercept were revoked. The ones which appear to still be valid appear not to be signed by any other keys, making it impossible to try to use the Web of Trust.

Any advice on how to authenticate that one is really dropping to a given reporter?

Speaking of mystery root certificates, in case anyone else is wondering about the root certs added to Firefox ESR (and thus TB, I think):

ISRG Root X1 is apparently not ISRG, the USAF cyberwar outfit, but Internet Security Research Group, which maintains LetsEncrypt, but I have been unable to verify this.

SZAFIR Root CA2 is apparently a Polish CA.

No idea about the others.

I believe the removed certs reflect Microsoft, Mozilla, etc, exacting "tech governance" for unforgiveable security breaches by some incompetent CAs.

Ordinary citizens need good sources of information about these actions.

In general you need to get the information from some trusted source.

Absent that, you can get the same information from multiple sources to become "more sure" it's accurate. Obviously, this isn't foolproof but if you get the same key for, e.g., Micah Lee from multiple different sources and they all have the same fingerprint either your adversary has compromised *all* your sources at the right moment or you've gotten a legitimate key.

For whatever it's worth, I have 927F 419D 7EC8 2C2F 149C 1BD1 403C 2657 CD99 4F73 for Micah Lee's key 0x403C2657CD994F73.

For SecureDrop specifically, there's also https://securedrop.org/directory.
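Comparing full fingerprints from several sources is easy to get subtly wrong when each source formats them differently. Here is a minimal Python sketch, with hypothetical function names, that normalizes spacing, case and a "0x" prefix and then requires every source to agree. It also shows that an OpenPGP v4 long key ID is just the last 16 hex digits of the fingerprint, which is why the two values above line up; key IDs are truncations, so the full fingerprint is the safer thing to compare.

```python
from typing import Iterable


def normalize_fingerprint(fp: str) -> str:
    """Strip spaces and an optional 0x prefix; upper-case the hex."""
    cleaned = fp.replace(" ", "").upper()
    if cleaned.startswith("0X"):
        cleaned = cleaned[2:]
    int(cleaned, 16)  # raises ValueError if anything non-hex remains
    return cleaned


def long_key_id(fingerprint: str) -> str:
    """An OpenPGP v4 long key ID is the last 16 hex digits of the fingerprint."""
    return normalize_fingerprint(fingerprint)[-16:]


def sources_agree(fingerprints: Iterable[str]) -> bool:
    """True only if every source reported the same full fingerprint."""
    normalized = {normalize_fingerprint(fp) for fp in fingerprints}
    return len(normalized) == 1
```

For example, feeding it the fingerprint as copied from a website, a keyserver and a business card would flag any single source that disagrees.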

>Or even worse, a US military base.

What's wrong with US military base? I think that military base is more secure than a civil facility because if it was bugged, it will harm base's owner, so it is checked for bugs. Own bugs can be reused by foreign spies, so no own bugs, they have enough living people there for surveillance. TorProject source codes are open, there is no harm if anyone saw it. The main concern are backdoors. But if US wanted backdoors in TP, they would be already there. They can secretly tamper with your hardware. But they don't. It's more profitable to pay universities for hacking your Tor, since Russia, China, North Korea, Iran, Syria, Nigeria and Cuba are not as advanced and just can't do the same.

> TorProject source codes are open, there is no harm if anyone saw it.

The concern is not about public information such as open source code. Obviously. Like any organization, Tor Project also handles matters which are best kept private, if possible, such as internal employee matters, political strategies, communications with journalists, etc.

> What's wrong with US military base? I think that military base is more secure than a civil facility because if it was bugged, it will harm base's owner, so it is checked for bugs.

Seriously? Really? It never occurred to you that USG is likely to bug any non-military "guest" resident on base? You really don't know that US military bases often host MI (Military Intelligence) units whose members often have had several 3-6 week courses in such topics as electronic espionage, advanced lock-picking, and worse?

> since Russia, China, North Korea, Iran

Seriously? You believe that Russian and Chinese cyberspies are not as "advanced" as any other well-funded cyberspies?

I've noticed that I receive errors with SSL certificates more often; when I switch circuits, the warning disappears.

Getting TLS certificate warnings from one circuit but not another could indicate something fishy going on with an exit node. You can use carml to see which exit you're currently using (with, e.g., carml monitor). If you do believe you've got some good evidence that an exit is misbehaving, you can alert the Tor Project via one of their contact methods.

Why doesn't Tor Project run a Secure Drop site where users can anonymously report suspicious incidents? You might follow Tails' example by providing a form to help ensure that reporting users provide the same information as other reports.