New low cost traffic analysis attacks and mitigations

Recently, Tobias Pulls and Rasmus Dahlberg published a paper entitled "Website Fingerprinting with Website Oracles".

"Website fingerprinting" is a category of attack where an adversary observes a user's encrypted data traffic, and uses traffic timing and quantity to guess what website that user is visiting. In this attack, the adversary has a database of web pages, and regularly downloads all of them in order to record their traffic timing and quantity characteristics, for comparison against encrypted traffic, to find potential target matches.

Practical website traffic fingerprinting attacks against the live Tor network have been limited by the sheer quantity and variety of all kinds (and combinations) of traffic that the Tor network carries. The paper reviews some of these practical difficulties in sections 2.4 and 7.3.

However, if specific types of traffic can be isolated, such as through onion service circuit setup fingerprinting, the attack seems more practical. This is why we recently deployed cover traffic to obscure client-side onion service circuit setup.
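As a toy illustration of the comparison step in website fingerprinting described earlier, the sketch below matches one observed trace against a small database of recorded page loads. Everything in it is an assumption made for illustration: the trace format (timestamp and signed size pairs), the four summary features, and the nearest-neighbor rule. Published attacks use far richer features and trained classifiers, so this is not the method from the paper.

```python
import math

def trace_features(trace):
    """Summarize a trace given as a list of (timestamp_seconds, signed_size) pairs.

    Positive sizes are bytes sent by the client, negative sizes are bytes
    received. This trace format is a hypothetical one chosen for the sketch.
    """
    sent = sum(size for _, size in trace if size > 0)
    received = sum(-size for _, size in trace if size < 0)
    duration = trace[-1][0] - trace[0][0] if trace else 0.0
    return (sent, received, len(trace), duration)

def closest_site(observed, database):
    """Return the site in `database` whose recorded trace is nearest to `observed`.

    `database` maps a site label to one reference trace. Distance is plain
    Euclidean distance over the unscaled features, so byte counts dominate.
    """
    target = trace_features(observed)
    return min(
        database,
        key=lambda site: math.dist(target, trace_features(database[site])),
    )

# Made-up reference traces that the adversary recorded by visiting each site.
database = {
    "site-a.example": [(0.0, 500), (0.1, -40000), (0.4, -60000)],
    "site-b.example": [(0.0, 500), (0.2, -3000)],
}

# Made-up encrypted trace observed between a client and its guard.
observed = [(0.0, 520), (0.15, -39000), (0.5, -61000)]
guess = closest_site(observed, database)
```

With only two candidate sites the guess is easy. The practical difficulty discussed above comes from the size and variety of real traffic, which makes false positives common.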

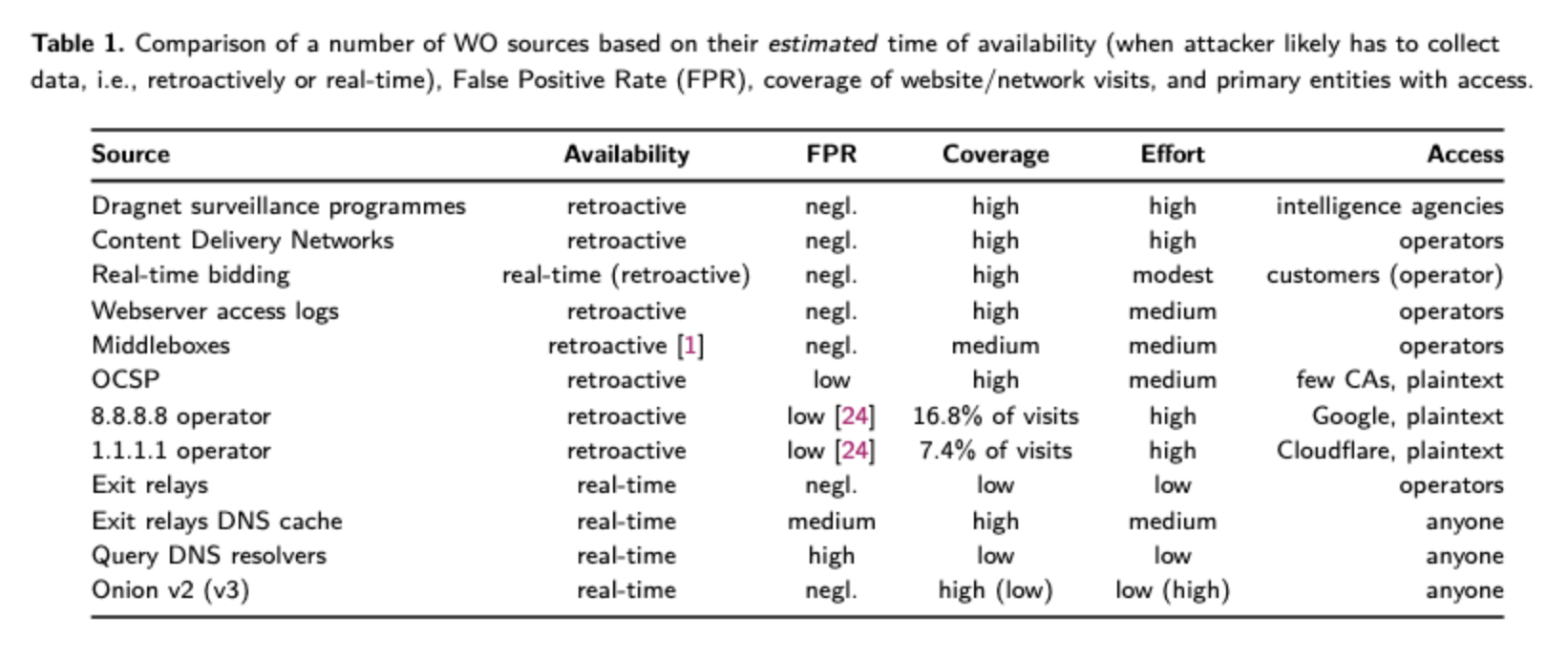

To address the problem of practicality against the entire Internet, this paper uses various kinds of public Internet infrastructure as side channels to narrow the set of websites and website visit times that an adversary has to consider. This allows the attacker to add confidence to their classifier's guesses, and rule out false positives, for low cost. The paper calls these side channels "Website Oracles".

As this table illustrates, several of these Website Oracles are low-cost/low-effort and have high coverage. We're particularly concerned with DNS, Real Time Bidding, and OCSP.
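The way an oracle rules out false positives can be sketched as a filter over a classifier's guesses. The oracle interface here (a callable that answers whether anyone visited a site within a time window), the score threshold, and the in-memory visit log are all hypothetical and chosen only to show the idea. A real oracle would be backed by something like DNS cache observations or OCSP responder data.

```python
def filter_with_oracle(guesses, visit_time, oracle, window_seconds=60.0, threshold=0.5):
    """Keep only the classifier guesses that the oracle can corroborate.

    guesses: list of (site, score) pairs from a fingerprinting classifier.
    oracle:  callable (site, start, end) -> bool, True if the site was visited
             by anyone during [start, end]. Hypothetical interface.
    """
    start = visit_time - window_seconds
    end = visit_time + window_seconds
    confirmed = [
        (site, score)
        for site, score in guesses
        if score >= threshold and oracle(site, start, end)
    ]
    # Highest-scoring corroborated guess first.
    return sorted(confirmed, key=lambda pair: pair[1], reverse=True)

def make_log_oracle(visit_log):
    """Build a toy oracle from a dict mapping site -> list of visit timestamps."""
    def oracle(site, start, end):
        return any(start <= t <= end for t in visit_log.get(site, []))
    return oracle

# Made-up data: the classifier's top guess was never seen by the oracle.
visit_log = {
    "rare.example": [1000.0],
    "popular.example": [10.0, 995.0, 1003.0],
}
guesses = [("rare.example", 0.7), ("other.example", 0.9), ("popular.example", 0.6)]
result = filter_with_oracle(
    guesses,
    visit_time=1001.0,
    oracle=make_log_oracle(visit_log),
    window_seconds=5.0,
)
```

In this example the highest-scoring guess is discarded because no visit to it was recorded near the observed time. A rarely visited site that does appear in the window becomes a much stronger match than the classifier alone could justify.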

All of these oracles matter to varying degrees for non-Tor Internet users too, particularly where services are centralized and in plaintext. Both DNS and OCSP are in plaintext. It is common practice for DNS to be centralized to public resolvers, and OCSP queries are already centralized to the browser CAs. This makes DNS and OCSP good collection points for website visit activity for large numbers of Internet users, not just Tor users.

Real Time Bidding ad networks are also a vector that Mozilla and EFF should be concerned about for non-Tor users, as they leak even more information about non-Tor users to ad network customers. Advertisers need not even pay anything or serve any ads to get information about all users who visit all sites that use the RTB ad network. On these bidding networks, visitor information is freely handed out to help ad buyers decide which users/visits they want to serve ads to. Nothing prevents advertisers from retaining this information for their own purposes, which also enables them to mount attacks, such as the one Tobias and Rasmus studied.

In terms of mitigating the use of these vectors in attacks against Tor, here are our recommendations for various groups in our community:

- Users: Do multiple things at once with your Tor client

- Exit relay Operators: Run a local resolver; stay up to date with Tor releases

- Mozilla/EFF/AdBlocker makers: Investigate Real Time Bidding ad networks

- Website Operators: Use v3 Onion Services

- Researchers: Study Cover Traffic Defenses

Because Tor uses encrypted TLS connections to carry multiple circuits, an adversary that externally observes Tor client traffic to a Tor Guard node will have a significantly harder time performing classification if that Tor client is doing multiple things at the same time. This was studied in section 6.3 of this paper by Tao Wang and Ian Goldberg. A similar argument can be made for mixing your client traffic with your own Tor Relay or Tor Bridge that you run, but that is very tricky to do correctly for it to actually help.

Exit relay operators should follow our recommendations for DNS. Specifically: avoid public DNS resolvers like 1.1.1.1 and 8.8.8.8, as they can be easily monitored and have unknown/unverifiable log retention policies. This also means that you should not use public centralized DNS-over-HTTPS resolvers either (sadly). Additionally, we will be working on improvements to the DNS cache in Tor via ticket 32678. When those improvements are implemented, DNS caching on your local resolver should be disabled, in favor of Tor's DNS cache.
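As a rough aid for the DNS recommendation above, an operator could check which resolvers a relay host is configured to use. The sketch below parses `resolv.conf`-style text and flags well-known public resolvers. The address list is an illustrative, incomplete assumption: 1.1.1.1 and 8.8.8.8 come from the text, and 1.0.0.1 and 8.8.4.4 are their secondary addresses. Non-loopback entries are only flagged for review, since they may still be a resolver you operate yourself.

```python
import ipaddress

# Illustrative and incomplete list of public resolver addresses (assumption).
PUBLIC_RESOLVERS = {"1.1.1.1", "1.0.0.1", "8.8.8.8", "8.8.4.4"}

def audit_resolv_conf(text):
    """Return (public, needs_review) nameserver lists found in resolv.conf text.

    public:       addresses that appear in PUBLIC_RESOLVERS.
    needs_review: other non-loopback addresses, which may or may not be
                  resolvers under the operator's control.
    """
    public, needs_review = [], []
    for line in text.splitlines():
        fields = line.split("#", 1)[0].split()
        if len(fields) < 2 or fields[0] != "nameserver":
            continue
        addr = fields[1]
        if addr in PUBLIC_RESOLVERS:
            public.append(addr)
            continue
        try:
            is_local = ipaddress.ip_address(addr).is_loopback
        except ValueError:
            # Unparseable address (for example, one with a zone suffix).
            is_local = False
        if not is_local:
            needs_review.append(addr)
    return public, needs_review

sample = """# example resolver configuration
nameserver 127.0.0.1
nameserver 8.8.8.8
nameserver 192.0.2.53
"""
public, needs_review = audit_resolv_conf(sample)
```

On a real host the text would typically be read from `/etc/resolv.conf`. A configuration that lists only a loopback address is consistent with running a local resolver, although this check says nothing about where that resolver forwards its queries.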

The ability of customers of Real Time Bidding ad networks to get so much information about website visit activity of regular users without even paying to run ads should be a concern of all Internet users, not just Tor users. Some Real Time Bidding networks perform some data minimization and blinding, but it is not clear which ones do this, and to what degree. Any that perform insufficient data minimization should be shamed and added to bad actor block lists. For us, anything that informs all bidders that a visit is from Tor *before* they win the bid (e.g., by giving out distinct browser fingerprints that can be tied to Tor Browser or IP addresses that can be associated with exit relays) is leaking too much information.

The Tor Project would participate in an adblocker campaign that specifically targets bad actors such as cryptominers, fingerprinters, and Real Time Bidding ad networks that perform little or no data minimization to bidders. We will not deploy general purpose ad blocking, though. Even for obvious ad networks that set visible cookies, coverage is 80% at best and often much lower. We need to specifically target widely-used Real Time Bidding ad networks for this to be effective.

If you run a sensitive website, hosting it as a v3 onion service is your best option. v2 onion services have their own Website Oracle that was mitigated by the v3 design. If you must also maintain a clear web presence, staple OCSP, avoid Real Time Bidding ad networks, and avoid using large-scale CDNs with log retention policies that you do not directly control. For all services and third party content elements on your site, you should ensure there is no IP address retention, and no high-resolution timing information retention (log timestamps should be truncated at the minute, hour, or day; which level depends on your visitor frequency).
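The log-retention advice above (no IP addresses, and timestamps truncated to the minute, hour, or day) can be sketched as a small scrubbing step applied before a record is written. The record layout and the field names `client_ip` and `timestamp` are hypothetical. Real web servers and logging pipelines have their own formats and configuration options for this.

```python
from datetime import datetime, timezone

def truncate_timestamp(ts, resolution="hour"):
    """Drop timing detail below the chosen resolution: minute, hour, or day."""
    if resolution == "minute":
        return ts.replace(second=0, microsecond=0)
    if resolution == "hour":
        return ts.replace(minute=0, second=0, microsecond=0)
    if resolution == "day":
        return ts.replace(hour=0, minute=0, second=0, microsecond=0)
    raise ValueError(f"unsupported resolution: {resolution!r}")

def scrub_log_record(record, resolution="hour"):
    """Return a copy of `record` without the client IP and with a coarse timestamp.

    `record` is a dict with hypothetical keys "client_ip" and "timestamp".
    """
    cleaned = {key: value for key, value in record.items() if key != "client_ip"}
    cleaned["timestamp"] = truncate_timestamp(record["timestamp"], resolution)
    return cleaned

record = {
    "client_ip": "198.51.100.7",
    "timestamp": datetime(2020, 1, 15, 13, 47, 29, 123456, tzinfo=timezone.utc),
    "path": "/index.html",
}
scrubbed = scrub_log_record(record, resolution="hour")
```

A low-traffic site would lean toward the coarser resolutions, because a single visit inside a one-minute bucket is still easy to correlate with an observed connection.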

We welcome and encourage research into cover traffic defenses for the general problem of Website Traffic Fingerprinting. We encourage researchers to review the circuit padding framework documentation and use it to develop novel defenses that can be easily deployed in Tor.

Comments

Please note that the comment area below has been archived.

> should truncated *should…

> should truncated

*should be truncated

I am guessing that what went…

I am guessing that what went wrong here is that Mike Perry ran a spellchecker, but this software failed to notice a syntactical error (the original post inadvertently omitted a word which is needed for correct grammar, rather than containing the kind of spelling error which is easily caught by widely available spellcheckers).

I see a metaphor between the relationship

spellcheckers : reproducible builds and "public inspection" of code from torproject.org

to

syntactical-errors : "upstream" provided software which is necessary for using Tor but which is not validated by reproducible builds and possibly not even by public inspection of open source code.

Unlike the minor error in Mike's post, a hard to spot flaw in a pseudo-random number generator could have devastating consequences for millions of endangered persons.

In other words, while reproducible builds are a huge advance, they may not protect us from subtle flaws introduced (and possibly even covertly mandated by the USG, not an organization to which it is easy for anyone anywhere to just say "no") into "upstream" code, much less hardware such as the USB controllers or hard disk controllers which are needed to operate the laptop on which I am writing this post.

Like it or not, we are all participants in an arms race: all the ordinary citizens versus all the governments and all the mega-corporations which are such overeager participants in "surveillance capitalism".

To Developers, all users…

To Developers,

all users using Tor must have a bot which opens random sites, so that the bot makes it harder for the government to tell whether a request was sent by a human or a bot

I guess it would be fairly…

I guess it would be fairly easy to add some such capability to the start_tor_browser script. The question is whether it would actually help keep users safer, or would just clog the Tor network without actually helping most users.

Another idea the be awesome…

Another idea that would be awesome with a bot is to have it run at random times in the background. Make it act interactively on every website. Have a feature with a bandwidth requirement for the bot that makes it look like you're watching a video or downloading a file. Set the bots to close at random times, or when there is lag as well.

A new blog post by Mike…

A new blog post by Mike Perry *_*

thanks for making my day, we really missed your blog posts Mike

Is bitmessage secure with…

Is bitmessage secure with tor with OnionTrafficOnly?

How to create good noise traffic in tor with OnionTrafficOnly?

> How to create good noise…

> How to create good noise traffic in tor with OnionTrafficOnly?

I also want to know this, explained in terms an ordinary Tor user who is not a coder can pursue.

avoid using large-scale CDNs…

Tor Browser should consider including Decentraleyes. And also just want to note that adblocker coverage (f.ex. with uBlock Origin) is better than it was in 2012.

This post is devastatingly…

This post is devastatingly sophisticated. I love it. More like this, please. But not a plurality; the blog has to be approachable for general audiences.

I too loved the post and…

I too loved the post and share everyone's gratitude for Mike Perry's work for TP, especially his role in developing Tor Browser, the Best-Thing-Yet for ordinary citizens. I'd also love to see more posts covering technical issues (the state of crypto in Tor vis-à-vis Quantum Cryptanalysis, for example), but agree that we need a mix of posts readable by prospective new users and prospective new Tor node operators, which ideally will inspire people considering trying Tor to follow through by adopting Tor for daily use.

Lots of work has gone into…

Lots of work has gone into padding established connections, but what about an observer forcibly interrupting traffic to record the timing or errors of disconnections? It occurred to me as I thought about how an external observer might be able to locate a Tor user who logs into ProtonMail, Tutanota, or a chat service and sends messages, for instance. I've lived in places where a momentary cut to the internet was regularly followed by the sound of a police siren or helicopter.

@ MP: it is very good to see…

@ newcomers to this blog: Mike Perry led the coders who developed Tor Browser, which was probably the single most important product yet developed by Tor Project (after Tor itself of course).

@ MP: it is very good to see you are still hard at work keeping Tor safer!

> Users: Do multiple things at once with your Tor client

Can you describe in more detail?

Many users presumably use Tor circuits mostly for either

o browsing the internet with Tor Browser, reading pages, downloading/uploading files etc

o obtaining updates for their Debian system using the onion mirrors

I take it keeping multiple tabs open to various websites in Tor Browser is not enough?

I have tried to argue that OnionShare is potentially an easy, practical, and immediately available solution to the ever increasing problem of breaches of sensitive personal information including voter records, medical records, financial transactions, educational records, travel records, and so on and so forth. Further, the threat landscape is ever expanding. In particular, multiple news stories over the past few years have confirmed in detail the warnings some posters made in comments to this blog over the past decade, that all ambitious national intelligence services increasingly have developed the means, motive, and opportunity for attacking large classes of ordinary citizens, seeking to mimic Keith Alexander's infamous injunction to US spies: collect everything about everyone. Begin by targeting telecoms, government and mega-corporation contractors and leapfrog from there. That is what NSA was doing around 2005-2012, as verified in detail in the Snowden leaks. And to a great extent, whether they work for the governments of FVEY nations, for Russia, for China, or for Iran, all spooks think alike.

Because there is already a huge infrastructure devoted to collecting the "data exhaust" of every human living or dead, it seems reasonable to speculate about growing a sufficient privacy industry to enable ordinary citizens who use Tor Browser to also use OnionShare for many mandatory instances of sharing personally identifiable information, for the dual purposes of

o obstructing the spooks and behavioral advertisers/real-time-bidders who wish to spy on this sensitive information,

o diversifying the content carried by their Tor circuits.

Any thoughts regarding this suggestion?

Could OnionShare possibly play a role in making name resolution harder for spooks and other baddies to exploit in order to attack ordinary citizens?

> Website Operators: Use v3…

> Website Operators: Use v3 Onion Services

Ideally, there would be a single frequently updated HowTo for new (and experienced) node operators which explains all these things in detail, following the very simple and smart rule used by Tails Project in their documentation:

o explain the most essential points first, in the simplest possible terms

o congratulate the new operator on helping themselves and everyone in the world by running a Tor node.

o "Now, to make your node more resistant to various kinds of attacks on your users"

o detailed instructions in one place for the extra steps mentioned in the blog post above.

I believe that such a document could be useful both in helping potential new operators to understand quickly that they can absolutely get a node up and running with little effort, and in helping them to improve their node once basic maintenance has become second nature for them.

> Users: Do multiple things…

> Users: Do multiple things at once with your Tor client

I have a suggestion which I hope could greatly help both developers of open source software and also help diversify the content of Tor circuits at the user level:

Developers rely upon bug reports which are sometimes provided automatically (hopefully only if the user agrees). I have long advocated making all such auto-reporting strongly anonymized and strongly encrypted. Tails does this using Whisperback, but possibly OnionShare could also be used.

A related example: Debian's venerable "popcon" should be strongly anonymized and strongly encrypted but it has never been either of those things. Which means that privacy software takes a hit because Debian users concerned with privacy are hardly likely to enable popcon, which means that usage of privacy tools is severely under-counted. (The Snowden leaks confirmed in detail warnings that NSA and other bad actors routinely exploit bug reports and usage reports to target victims. To state the obvious: knowing that a target (say the personal PC of a telecom engineer or government official) is running a no longer supported version of Windows 7 [looking at Putin] or that a targeted server is running a version of Azure which has an exploitable zero-day, is just what the cyberwarrior needs to start his or her attack.)

Another example: the spell-checker in gedit could use some serious updating to include "novel" terms which posters are likely to use but which are too culturally recent to yet appear in standard word lists. What is needed is automatic reporting to the spell-checker developer (if the user is willing to trust them) of words the current version didn't recognize. Again, to state the obvious: this kind of information is invaluable to cyberspooks who want to use stylometry to near-uniquely identify an "anonymous" writer, so it requires very strong protection.

What I propose is that TP try to develop a torified open-source free tool which is to automatic-bug/usage-reporting what Tor Browser is to web-browsing, which makes it easy for users to check developer web sites they are willing to trust with strongly encrypted and anonymized usage/error reports.

Most websites get some…

Most websites get some content from google.com on the fly. If I browse two of these websites at the same time in different tabs, do I share the same IP address with google?

Tor Browser should block…

Tor Browser should block recaptcha spyware. It is able to uniquely fingerprint most machines. It is extremely obfuscated with a virtual machine, but it can be unraveled. In some days I am going to request an audio captcha from each exit node, which will cause a recaptcha denial of service for each Tor user, to raise awareness.

WARNING: SHA1 is fully…

WARNING: SHA1 is fully broken!

https://eprint.iacr.org/2020/014.pdf

https://sha-mbles.github.io/

https://arstechnica.com/information-technology/2020/01/pgp-keys-softwar…

"Behold: the world's first known chosen-prefix collision of widely used hash function.

The new collision gives attackers more options and flexibility than were available with the previous technique. It makes it practical to create PGP encryption keys that, when digitally signed using SHA1 algorithm, impersonate a chosen target. More generally, it produces the same hash for two or more attacker-chosen inputs by appending data to each of them. The attack unveiled on Tuesday also costs as little as $45,000 to carry out."

Be prepared.