Research problem: adaptive throttling of Tor clients by entry guards

Looking for a paper topic (or a thesis topic)? Here's a Tor research area that needs more attention. The short version is: if we prevent the really loud users from using too much of the Tor network, how much can it help?

We've instrumented Tor's entry relays so they can rate-limit connections from users, and we've instrumented the directory authorities so they can change the rate-limiting parameters globally across the network. Which parameter values improve performance for the Tor network as a whole? How should relays adapt their rate-limiting parameters based on their capacity and based on the network load they see, and what rate-limiting algorithms will work best?

We'd love to work with you to help answer these questions.

Background:

One of the reasons why Tor is slow is that some people use it for file-sharing or other high-volume transfers. That means if you want to get your instant message cell through, it sometimes needs to wait in line behind a pile of other cells — leading to high latency and, maybe even worse, highly variable latency.

One way to improve the situation is Can Tang and Ian Goldberg's "An Improved Algorithm for Tor Circuit Scheduling", to be presented next month at ACM CCS 2010 and already integrated into Tor as of 0.2.2.11-alpha. The idea is to track how many cells the relay has handled for each circuit lately, and give priority to cells from quieter circuits.
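
To make the "quieter circuits first" idea concrete, here is a minimal Python sketch that keeps an exponentially decaying cell count per circuit and always services the circuit with the lowest count. The class and function names, the 30-second half-life, and the sample traffic are illustrative assumptions, not Tor's actual implementation.

```python
import math


class CircuitCounter:
    """Exponentially decaying count of cells recently handled on one circuit."""

    def __init__(self, half_life_s):
        # After half_life_s seconds of silence the count drops to half.
        self.decay = math.log(2) / half_life_s
        self.count = 0.0
        self.last_update = 0.0

    def value_at(self, now):
        # Decay the stored count forward to the current time.
        return self.count * math.exp(-self.decay * (now - self.last_update))

    def record_cell(self, now):
        self.count = self.value_at(now) + 1.0
        self.last_update = now


def pick_next_circuit(counters, now):
    """Return the id of the circuit that has been quietest lately."""
    return min(counters, key=lambda cid: counters[cid].value_at(now))


# Hypothetical traffic: a bulk transfer and a single instant-message cell.
counters = {"bulk": CircuitCounter(30.0), "chat": CircuitCounter(30.0)}
for i in range(100):
    counters["bulk"].record_cell(now=i * 0.01)
counters["chat"].record_cell(now=0.5)
next_circuit = pick_next_circuit(counters, now=1.0)
```

In this toy run the chat circuit wins, because its decayed count is far below the bulk circuit's. Each update is constant-time per cell, which matters for relays that handle many circuits.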

But while that puts some cells in front of others, it can only work so many miracles: if many cells have been placed in front of your cell it still has to wait, and the more overall load there is in the network, the more often that will happen.

Which leads to the research problem: if we work to keep the really loud flows off the network in the first place, how much can it help?

Tor 0.2.2.15-alpha lets you set the PerConnBWRate and PerConnBWBurst config options in your relay, to use token buckets to rate limit connections from non-relays. Tor 0.2.2.16-alpha added the capability for the directory authorities to broadcast network-wide token bucket parameters, so we can change how much throttling there is and then observe the results on the network.

So the first question is to model how this should work to improve performance at a single entry guard. Say we do periodic performance tests fetching files of size 50KB, 1MB, and 5MB using that relay as our first hop, and say the relay sets PerConnBWRate to 5KB/s and PerConnBWBurst to 2MB. That is, bursts of up to 2 megabytes on the connection are unthrottled, but after that it's squeezed to a long-term average of 5 kilobytes per second, until the flow lets up at which point the allowed burst slowly builds back up to 2MB. We would expect the 5MB tests to show horrible performance, since they'll need at least 3000/5=600 seconds to fetch the last 3MB of the file. We would expect the 50KB and 1MB tests to look better, though: if we're squeezing out the really big flows, there's more space for what's left.
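
The arithmetic above can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the bucket starts full, the client's link is never the bottleneck, and 1MB is treated as 1000KB as in the 3000/5 calculation. The class and function names are ours, not Tor's.

```python
class TokenBucket:
    """Token bucket measured in kilobytes, starting with a full burst."""

    def __init__(self, rate_kb_per_s, burst_kb):
        self.rate = rate_kb_per_s
        self.burst = burst_kb
        self.tokens = burst_kb

    def refill(self, seconds):
        # Tokens accumulate at the long-term rate, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + self.rate * seconds)

    def consume(self, wanted_kb):
        # Grant as much as the bucket currently holds, never more.
        granted = min(wanted_kb, self.tokens)
        self.tokens -= granted
        return granted


def min_seconds_beyond_burst(file_kb, rate_kb_per_s, burst_kb):
    """Lower bound on the time needed for the part of a file past the burst.

    By time t the bucket has admitted at most burst + rate * t kilobytes.
    """
    return max(0.0, file_kb - burst_kb) / rate_kb_per_s


for size_kb in (50, 1000, 5000):
    print(size_kb, min_seconds_beyond_burst(size_kb, rate_kb_per_s=5, burst_kb=2000))
```

With these parameters the 50KB and 1MB fetches fit inside the burst, and the 5MB fetch hits the 600-second bound worked out above. An emptied bucket needs 2000/5 = 400 idle seconds to build the full burst back up.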

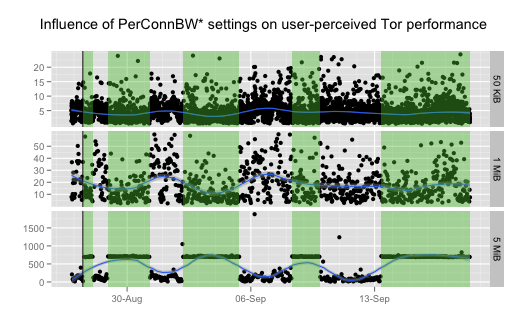

We actually performed this experiment, using Sebastian's relay fluxe3. You can see here his performance graphs over time. The black dots are individual fetches, the y axis is how many seconds it took to fetch the file, and the x axis is time (the green-shaded areas are when the feature is turned on). Don't pay too much attention to the blue smoothing line; it's probably not matching the actual distribution well.

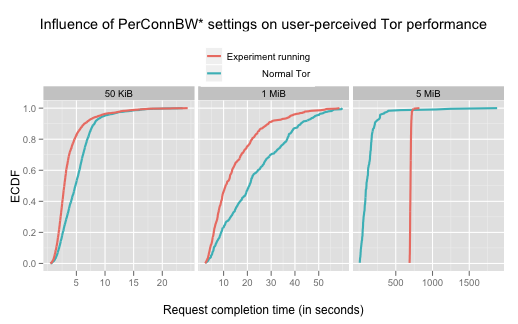

Here are the cumulative distribution functions for the same data. The performance gets significantly better for both the 50KB and the 1MB downloads, and as expected gets significantly worse for the 5MB downloads.

Here's the raw data: 50kb, 1mb, 5mb. See the Torperf howto for help interpreting it.
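
If you want to build this kind of CDF yourself from a list of fetch times, a minimal Python sketch follows. The sample numbers are made up for illustration and are not taken from the fluxe3 data; we also assume you have already extracted one completion time in seconds per fetch.

```python
def empirical_cdf(samples):
    """Return (value, fraction of samples <= value) pairs in ascending order."""
    ordered = sorted(samples)
    n = len(ordered)
    return [(value, (i + 1) / n) for i, value in enumerate(ordered)]


def fraction_within(samples, limit_seconds):
    """Fraction of fetches that completed within limit_seconds."""
    return sum(1 for s in samples if s <= limit_seconds) / len(samples)


# Made-up fetch times in seconds, for illustration only.
unthrottled = [4.0, 6.5, 9.0, 12.0, 30.0]
throttled = [3.0, 4.0, 5.5, 7.0, 11.0]

before = fraction_within(unthrottled, 10.0)
after = fraction_within(throttled, 10.0)
```

Comparing the two fractions at several time limits gives the same picture as overlaying the two curves: a curve that sits higher at a given time means more fetches finished by then.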

So far so good; we've done the proof of concept, and now we need a research group to step in, make it rigorous, and help tackle the real research questions.

The next question is: how well does this trick work under various conditions? It would seem that if there's plenty of spare bandwidth on the relay, it should have little effect. If we choose parameters that are too lenient, it also would have little effect. But choosing parameters that are too low will hurt normal web browsing users too. Are there rate and burst values that would cleanly separate web browsers from bulk downloaders? Will certain relays (that is, relays with certain characteristics) provide clearer performance improvements than others?

How often is it the case, for various relay capacities and various user loads, that at least one connection is being throttled? The CDFs appear to show improved performance spread out pretty evenly relative to download time. What statistics should we make relays track over time so we can get a better intuition about the load they're really seeing?
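
As one possible starting point for the first question, a relay could keep a small aggregate counter per reporting interval. The sketch below is hypothetical: the field names, the notion of an "interval", and how a relay would decide that a connection counts as throttled are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class ThrottleStats:
    """Aggregate counters only; no per-client information is kept."""

    intervals: int = 0
    intervals_with_throttling: int = 0
    most_throttled_at_once: int = 0

    def record_interval(self, throttled_connections):
        # Call once per interval with the number of connections whose
        # bucket ran dry during that interval.
        self.intervals += 1
        if throttled_connections > 0:
            self.intervals_with_throttling += 1
        self.most_throttled_at_once = max(
            self.most_throttled_at_once, throttled_connections
        )

    def fraction_with_throttling(self):
        if self.intervals == 0:
            return 0.0
        return self.intervals_with_throttling / self.intervals


stats = ThrottleStats()
for throttled in (0, 2, 0, 1):  # made-up observations
    stats.record_interval(throttled)
```

Whether counters like these are safe to publish is itself part of the research question, since any statistic a relay reports has to be weighed against what it reveals about users.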

Now is where it gets tricky. If we're only thinking about one relay turning on this feature in a vacuum, then if that relay has enough capacity to handle all its flows, it should — no sense slowing down anybody if you can handle all the traffic. But instead think of the entire Tor network as a system: your Tor requests might also slow down because a loud Tor flow entered at some other relay and is colliding with yours somewhere along the path. So there's a reason to squeeze incoming connections even if you have enough capacity to handle them: to reduce the effects of bottlenecks elsewhere in the system. If all relays squeeze client connections, what changes in the performance test results do we expect to see for various rate limiting parameter values?

We have the capability of doing network-wide experiments to validate your theory, by putting the rate and burst in the hourly networkstatus consensus and letting relays pick it up from there. That is, you can modify the global network-wide parameters and then observe the effects in the network. Note that the experiment will be complicated by the fact that only relays running a sufficiently recent version of Tor will honor the parameters; that fraction should increase significantly when Tor 0.2.2.x becomes the new stable release (sometime in late 2010).

And finally, once you have a good intuition about how throttling flows at point X affects flow performance at point Y, we get to the really hard research questions. It's probably the case that no fixed network-wide rate limiting parameters are going to provide optimal behavior: the parameters ought to be a function of the load patterns on the network and of the capacity of the given relay. Should relays track the flows they've seen recently and adapt their throttling parameters over time? Is there some flow distribution that relays can learn the parameters of, such that we e.g. throttle the loudest 10% of flows? Can we approximate optimal behavior when relays base their parameters on only the local behavior they see, or do we need a more global view of the system to choose good parameters? And what does "optimal" mean here anyway? Or said another way, how much flexibility do we have to define optimal, or do the facts here railroad us into preferring certain definitions?
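
As a strawman for the "loudest 10%" idea, here is a minimal Python sketch that ranks recently seen flows by volume and marks the top fraction as candidates for throttling. The function name, the per-flow byte counts, and the choice to always mark at least one flow are assumptions for illustration; a real design would also have to decide what window "recently" covers.

```python
def loudest_flows(bytes_by_flow, loud_fraction=0.10):
    """Return the ids of the loudest loud_fraction of flows by byte count."""
    if not bytes_by_flow:
        return set()
    # Always mark at least one flow so tiny samples still produce a result.
    n_loud = max(1, round(len(bytes_by_flow) * loud_fraction))
    ranked = sorted(bytes_by_flow, key=bytes_by_flow.get, reverse=True)
    return set(ranked[:n_loud])


# Made-up recent volumes in bytes: nine light flows and one bulk transfer.
recent = {f"flow{i}": 50_000 + i * 1_000 for i in range(9)}
recent["bulk"] = 500_000_000
loud = loudest_flows(recent)
```

Note that a fixed fraction throttles somebody even when every flow is small, which is one reason the definition of "optimal" above matters.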

What are the anonymity implications of letting the behavior of user A influence the performance of user B, in the local-view case or the global-view case? We could imagine active attacks that aim to influence the parameters; can those impact anonymity?

As a nice side effect, this feature may provide a defense against Sambuddho's bandwidth-based link congestion attack, which relies on a sustained high-volume flow — if the attack ever gets precise enough that we can try out our defense and compare.

Here are some other constraints to keep in mind:

- We need the Burst to be big enough to handle directory fetches (up to a megabyte or two), or things will get really ugly because clients will get slowed down while bootstrapping.

- Mike's bandwidth authority measurement scripts send traffic over relays to discover their actual capacity relative to their advertised capacity. The larger the claimed capacity, the more they send — up to several megabytes for the fast relays. If relays throttle these bandwidth tests, the directory authorities will assign less traffic to them, making them appear to provide better performance when in fact they're just being less useful to the network. This is an example of a case where it would be easy to misinterpret your results if you don't understand the rest of the Tor system. A short-term workaround would be to turn the bandwidth authority Tors into relays so they don't get throttled.

- You'll want to do experiments on an established relay with the Guard flag, or it probably won't see many client connections except for clients fetching directory updates. Note that the longer a relay has had the Guard flag, the more users it will have attracted; but after a month this effect falls off.

- Thinking from an economic perspective, it may turn out that the performance for a given user doesn't actually get better if we throttle the high-volume users, yet we've still improved things. That is, if the available capacity of the Tor network improves and thus it is supporting more users, we've made a better Tor network even if we didn't make it faster for individual users. Fortunately, this feedback effect shouldn't happen for short-term experiments; but it's something to keep in mind for the long term.

- It sure would be nice if whatever rate limiting algorithm you recommend is efficient to calculate and update over time. Some relays are seeing tens of thousands of users, and can't afford much processing time on each.

Comments

Please note that the comment area below has been archived.

Hmmm... Seems to me that one could game the system by simply running a relay from the same IP as one's p2p program. Entry guards would then not throttle that connection. Compounding the issue, those added relays would likely run as non-exit. While they would contribute to the network, they'd still suck up rarer and more valuable exit bandwidth without providing any in return. There would need to be safeguards against this behavior, otherwise the proposal would create an incentive to run relays for enhanced bittorrent service. That's probably not the sort of incentive torproject wants to create.

Additionally, what happens to the Iranian user, unable to run a relay, who's trying to upload their protest video to youtube? From where I'm sitting, I would attempt to isolate applications on circuits (via altered proposal 171), increase circuit lifetime, then simply continue to favor quieter circuits.

Relevant links:

http://archives.seul.org/or/dev/Jul-2010/msg00021.html

http://archives.seul.org/or/dev/Sep-2010/msg00007.html

Rate limiting is one solution - but it would need to be a reasonable rate.

BTW: Why not advertise? Obviously, more people acting as Tor hosts would improve service, but I've never seen Tor actually advertised.

Letting the word slip out is one method. Pushing the word out is another. Rate limiting will help in cutting the traffic used by file sharing and CP downloads, but this can also affect the normal user.

Why not get a few small ads in a few computer mags or even online?

We don't advertise by design. We've repeatedly watched a number of other circumvention tools make a huge press splash over the years. They talk about "for freedom" or "taking down the firewall" or something along these lines. Inevitably, they just gave the censors and the technology companies that supply them a reason to block them at all costs.

Arguably, the world knows about Tor already. We're an anonymity/privacy network first, circumvention second. A growing number of people use a one-hop proxy system to bypass the firewall, and then use tor over that to protect themselves. They realize the one-hop proxy can be run by the government or those friendly to the government to spy on those using it.

There's an arms race that we're trying to keep as slow as possible. In our experience, advertising and pushing Tor into the face of those who want to defeat us can only accelerate it.

Hi,

Thanks for that new paper and research. I would participate with pleasure if I can help you with my relay by sharing my stats, and I hope to continue helping to improve the Tor network.

Best Regards

SwissTorExit

please make this happen, I am so tired of slow Tor vs. the fast Tor of years past. I don't think people who want warez and to download movies and porn should get to hold Tor hostage... so, please, with a cherry on top, make this happen and stop those gawd darn file sharers.

I really like Tor and appreciate the work you put into it. That said, when are you going to start preventing Tor from building circuits with every node in the same city?

TOR has become useless not because of speed issues but because every major site identifies TOR and effectively blocks it. TOR is shooting itself in the foot.

"Every major site" you say? Tor works wherever I take it. What can't you get through to? Twitter works, Facebook works, Google works...

Youtube doesn't work (enters you into a practically infinite loop of CAPTCHA requests, till you give up).

Hotmail doesn't work (does the same thing as Youtube, but disconnects you after 5 CAPTCHA attempts, despite typing the correct characters).

Gmail, BTW, identifies you coming from a TOR network and will let you register only if you provide a mobile phone number for a confirmation SMS. (did we say the Chinese government loves this?)

Wikipedia will not let you register via a TOR network...

Should I go on?

TOR is a wonderful idea (provided it's not used for illegal activities and/or piracy), but if all the major websites that have the resources to identify TOR do so, and apply impractical usability measures against TOR users, what good is TOR?

This is a known and intermittent problem; it does not mean that Google, Youtube, Hotmail, Yahoo, etc. consider Tor to be spyware.

When you use Tor, you are sending queries through exit relays that are also shared by thousands of other users. Tor users typically see this message when many Tor users are querying Google in a short period of time. Google interprets the high volume of traffic from a single IP address (the exit relay you happened to pick) as somebody trying to "crawl" their website, so it slows down traffic from that IP address for a short time.

An alternate explanation is that Google tries to detect certain kinds of spyware or viruses that send distinctive queries to Google Search. It notes the IP addresses from which those queries are received (not realizing that they are Tor exit relays), and tries to warn any connections coming from those IP addresses that recent queries indicate an infection.

To our knowledge, Google is not doing anything intentionally specifically to deter or block Tor use. The error message about an infected machine should clear up again after a short time.

Torbutton 1.2.5 (released in mid 2010) detects Google captchas and can automatically redirect you to a more Tor-friendly search engine such as Ixquick or Bing.

Further, smarter sites already realize an IP address is not a person and have other methods to authenticate you. gmail doesn't always require a sms authorization through Tor. I've registered a few accounts using Tor through gmail in the past week successfully. Gmail is moving to two-factor authentication, one of those factors is sms.

Rather than complain about what Tor cannot do, provide specific steps, proven with research, that we can take to improve Tor.

phobos, thanks for your thoughtful reply, although it doesn't coincide with actual experience. Every TOR exit is easily identified as such (see http://www.geoiptool.com/ for example) and as long as this is the case, TOR cannot be fully effective.

I can't imagine Google not doing something against TOR, as one of TOR's declared goals is to prevent traffic analysis. Why would Google cooperate with something that works against what its entire business model is based on???

Again, if you can make TOR exits not identifiable as such, you may have a useful tool. Otherwise, TOR only works with small websites that are unable to analyse traffic effectively anyway. Look at http://www.geoiptool.com/ via TOR and see what I mean.

The fact is Tor works for SOME and not others. Not everybody can use it effectively and there are things for those working on Tor to work on. All things considered, none of the problems you've mentioned are being ignored. As has been stated, the Google redirect to other search engines is one solution. It may not be ideal. It does work for those who really need Tor.

Quit lying and appeasing the press! Let's break it down shall we...

No one above mentioned that google virus captcha, no one cares about it, NEWNYM, done.

It is NOT possible to sign up for Gmail or post on CraigsList personals. Both require SMS. OkCupid refuses both accounts and traffic. Facebook routinely pops up account verification if it sees you 'traveling'. PlentyOfFish deletes the accounts of travelers. Gmail pops up "give us your SMS" on old accounts. I don't even remember what MySpace does. And so on, and on, need I continue?, seriously, the list is long...

Gmail [google] *is*/*claims to be* the "smartest site", and they're broken, how ironic is that?!

Gmail especially doesn't need two factor auth, they've got SSL for the parts that matter. And we've got secure computers. If I wanted two factor, I'd ask them for a kilo-login's worth of OPIE at sign up and print it out, or give them a pubkey. Give ME options, don't stuff crap ones down our throats.

And users have pen and paper air gap for their damn forgotten and weak internet passwords.

Sites are doing SMS primarily to database people [google is evil remember] and secondly to raise costs of abuse.

Do NOT be the fools and think otherwise. Don't believe me? Look at the order in which the first SMS's came out. First, toss SMS wall up. Next the 'protecting malfeasance' explanation, but that was evidentiary and thus poor PR. So third, it's now the 'we are giving you cool new two factor/recovery' whitewash. Sly little twist, same BS.

You know why these sites are able to 100% detect and block Tor instantly, I'd bet because TorProject provides that damn online tordnesexitlist checker. Care to cough up the query logs on that???

Yes, they could run a node and scrape the descriptor list. But at least there'd be a race condition whereby a legitimate user could get in on new exits.

And sure, they could grok the node archives [oops] to nuke prior accounts.

But some of these sites are run by such clueless morons (CL for example) that they'd never get past the shebang in binsh to do it.

"gmail doesn't always require a sms authorization through Tor. I've registered a few accounts using Tor through gmail in the past week successfully"

Ba.lone.y, I've been trying to sign up for CL and gmail ever since SMS went up nearly a year ago. I gave up. Care to document your exits?

And for all the appeasement and tools Tor gives to these sites, Tor should at least be able to get corporate confirmation from them regarding: "Yep, we suck, we're blocking Tor, lol."

Sorry for the rant, but I'm getting physically ill with all these major sites pissing all over legitimate customers and users like us. Every month some site goes tits up for Tor, it's ridiculous and totally unnecessary.

Specific improvements? Tor's CEO can march into these sites' CEOs' offices and have a constructive, open, educative, chat.

Do it on the record in panel at a con for all I care.

The *chan/anonymous/i crowd is simply not that much load on their abuse, smartcoder and goodidea departments to warrant nuking all the other legitimate users of Tor along with it.

And they're the ones who are going to buy the prepaid cells just to screw around.

So get back to nuking the bad accounts, not Tor.

Oh well, thanks for Tor anyways. Braindead corporate policies aren't really Tor's fault.

If possible, could you provide the following: a plot of time elapsed between cells (i.e. differences in timestamps), over time? There may be a way to define "loud" using simple machine learning at the relay. Thanks. An interesting problem.

Which cells? You mean showing the distribution of when we send cells for a given circuit? Or for multiple circuits? Or for TLS connections (which multiplex circuits)?

Many of these are possible, but I need to know more about what you actually planned to look at before I can guess what data would help you most.

Sorry, I later realized it's probably not the simplest solution...

But what I meant was cell as in "Tor packets." Whatever a unit of traffic is from the point of view of the relay, inbound -- and for individual circuits (this part I realized only now).

So, in a test, you could have a relay set up just for one test client. That client generates both types of traffic simultaneously, and the relay just logs time differences between cells (for each circuit).

A high number of short times between cells/packets would indicate a sustained transfer, i.e. "loud." You can distinguish between loud and the rest by this measure of average time between cells. But you need a threshold, and this can be determined using simple machine learning.

So you end up with a plot of that delta vs time, for each circuit. Then superimpose them.

From the point of view of the relay: "In the past hour or so, 'loud' connections average x microseconds between inbound cells/packets, while everyone else averages y. So in the next hour, I will give traffic averaging more than (x+y)/2 a higher priority." The x and y are easy enough to determine automatically at each individual relay.

I hope that makes sense.
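
A minimal Python sketch of the midpoint rule described in this comment: it splits circuits into two groups by their mean gap between cells using a one-dimensional two-means loop, then returns the midpoint (x+y)/2 of the two group averages. Circuits whose mean gap falls below the midpoint would be the "loud" ones. The function name and the sample gaps are hypothetical, and this is only one simple way to pick x and y.

```python
def midpoint_threshold(mean_gaps_us, iterations=20):
    """Return (x + y) / 2, where x and y are the mean inter-cell gaps
    (in microseconds) of the loud and quiet groups of circuits."""
    lo, hi = min(mean_gaps_us), max(mean_gaps_us)
    if lo == hi:
        return lo  # every circuit looks the same; nothing to separate
    x, y = lo, hi  # initial guesses for the loud and quiet averages
    for _ in range(iterations):
        threshold = (x + y) / 2
        loud = [g for g in mean_gaps_us if g < threshold]
        quiet = [g for g in mean_gaps_us if g >= threshold]
        new_x = sum(loud) / len(loud)
        new_y = sum(quiet) / len(quiet)
        if (new_x, new_y) == (x, y):
            break  # group averages stopped moving
        x, y = new_x, new_y
    return (x + y) / 2


# Made-up per-circuit averages: three bulk transfers and three quiet circuits.
gaps = [100, 120, 110, 5000, 6000, 5500]
threshold = midpoint_threshold(gaps)
loud_circuits = [g for g in gaps if g < threshold]
```

This only separates traffic cleanly when the two groups are well apart; with overlapping distributions the midpoint will misclassify some circuits.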

Well, I don't agree. It depends on where you go, but I've found Tor less blocked than a year ago; it seems that it's better understood by some sites ...

For my part I am almost never blocked and can use it on any site I need.

So keep up your great work :)

SwissTorExit

^^^ "every major site"? Huh? I don't have those problems on the major sites I visit. You should contact those in charge of the sites you are referring to and explain Tor to them and why they should allow some scheme for Tor users (like SASL for IRC, or what Tor folks proposed for Wikipedia editing by Tor users).

And it's "Tor", not "TOR".

Hats off to TOR for providing an uncensored gateway to the internet. It is just a matter of time, I believe, before TOR's performance and outreach extend manifold. I hope the people funding TOR and those actually involved in research and development of TOR may always get the financial, moral and research support that they need to keep TOR up and running. cheers

How about researching ways to best implement padding/morphing to prevent entry node/ISP content fingerprinting attacks using traffic classifiers set to compensate for Tor's packet sizes? I doubt those Chinese dissidents are going to be much concerned with network speed when they're lined up in front of a firing squad.

The two problems are almost identical. A method that successfully prioritizes traffic as described can be adapted to what you describe.

It can? That would surprise me. Sounds like you have a really insightful paper waiting to be written.

My first reason for being surprised is that website fingerprinting should be able to work on even quite small traffic flows, like 50k or 100k. Whereas the client throttling mentioned in the blog post should ignore all flows under some much higher threshold. Unless I'm wrong.

The trick to all these writeups is walking the reader through enough context with respect to the rest of the research field that you can answer all the "but have you thought about foo?" questions.

Yes, I suspect (as Herrmann, Wendolsky, and Federrath have confirmed) that packet/cell frequencies tell all -- whether the observer is a well-meaning entry guard looking to differentiate between traffic patterns, or an attacker matching fingerprints.

Bayesian analysis gives extremely high accuracy but is computationally intensive, much more than what is needed. I also look forward to papers on the subject, but will leave the (public) research to the experts. ;)

I agree: that's another good research topic that should be tackled.

See the list at https://www.torproject.org/research.html.en#Ideas

The website fingerprinting question has been on the research list for years. I've peddled it to a wide variety of researchers. They know about it. We've funded research on it ourselves.

It turns out that getting useful results on the topic is harder than it seems like it should be. Please go out there and prove us wrong.

But if your logic is "you must ignore x, because there is a topic y that matters a lot", that's going to make it tough to get any work done around here. :)

ok i'm curious
