Mission Improbable: Hardening Android for Security And Privacy

Updates: See the Changes section for a list of changes since initial posting.

After a long wait, the Tor Project is happy to announce a refresh of our Tor-enabled Android phone prototype.

This prototype is meant to show a possible direction for Tor on mobile. While I use it myself for my personal communications, it has some rough edges, and installation and update will require familiarity with Linux.

The prototype is also meant to show that it is still possible to replace and modify your mobile phone's operating system while retaining verified boot security - though only just barely. The Android ecosystem is moving very fast, and in this rapid development, we are concerned that the freedom of users to use, study, share, and improve the operating system software on their phones is being threatened. If we lose these freedoms on mobile, we may never get them back. This is especially troubling as mobile access to the Internet becomes the primary form of Internet usage worldwide.

Quick Recap

We are trying to demonstrate that it is possible to build a phone that respects user choice and freedom, vastly reduces vulnerability surface, and sets a direction for the ecosystem with respect to how to meet the needs of high-security users. Obviously this is a large task. Just as with our earlier prototype, we are relying on suggestions and support from the wider community.

Help from the Community

When we released our first prototype, the Android community exceeded our wildest expectations with respect to their excitement and contributions. The comments on our initial blog post were filled with helpful suggestions.

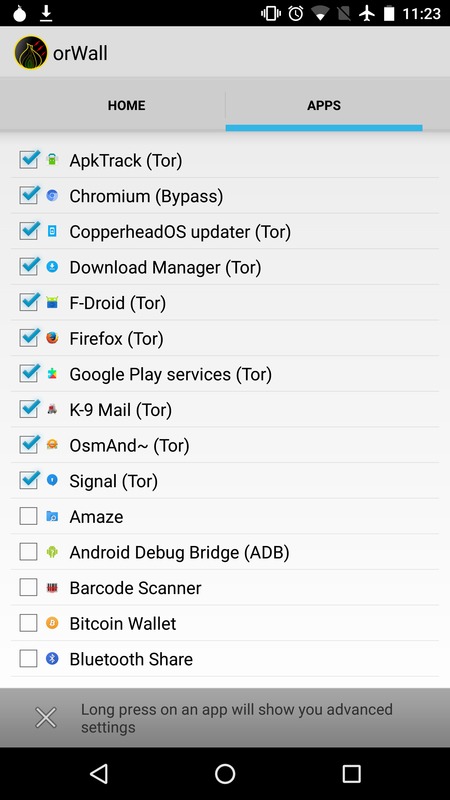

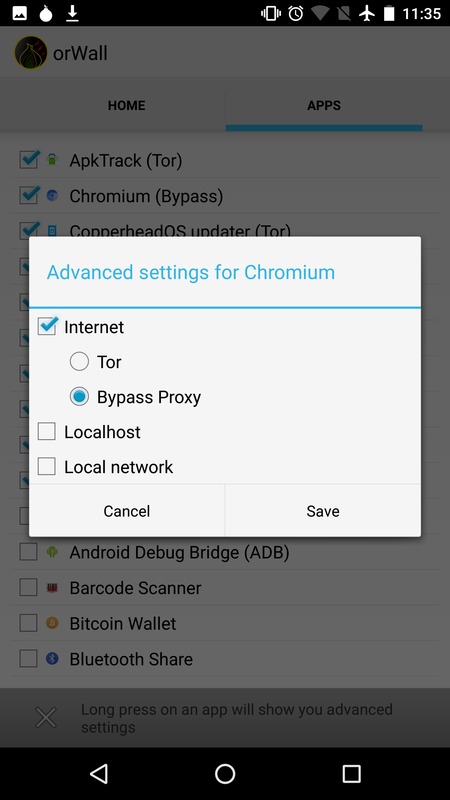

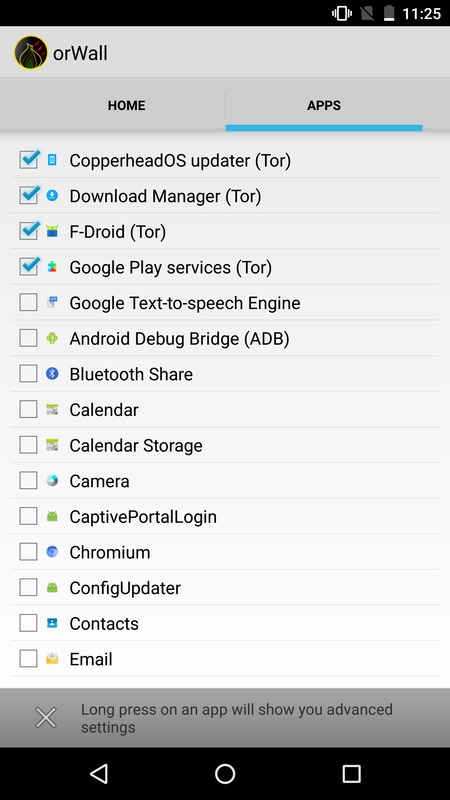

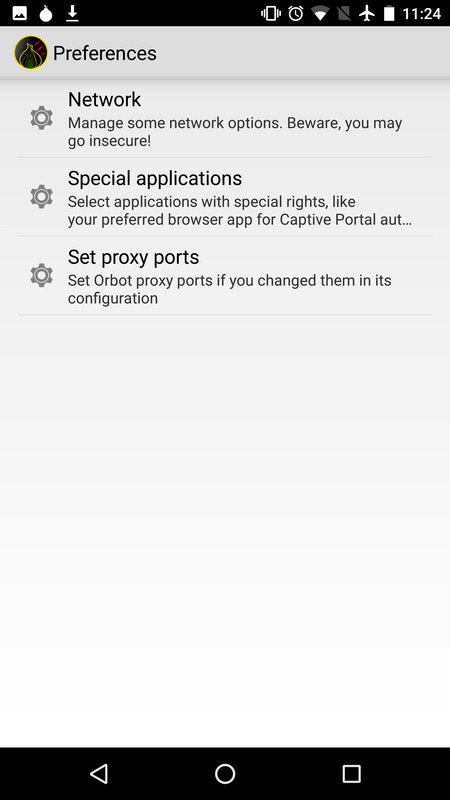

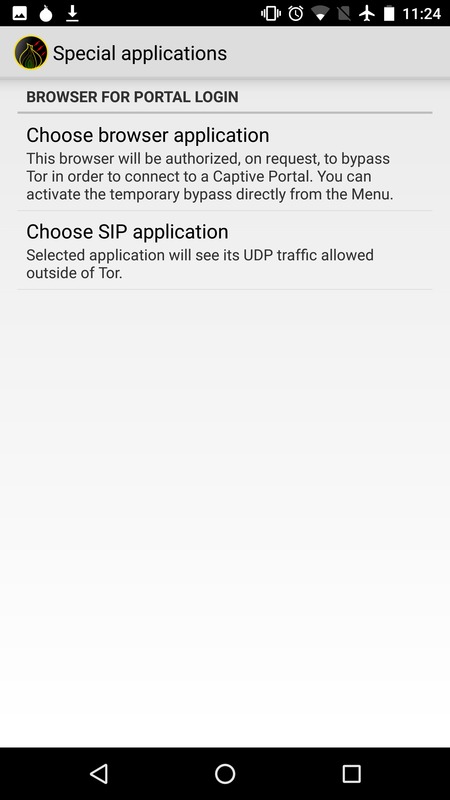

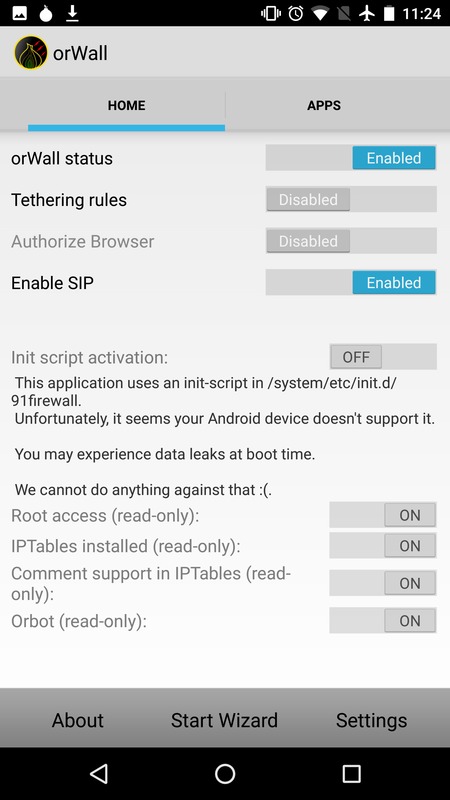

Soon after that post went up, Cédric Jeanneret took my Droidwall scripts and adapted them into the very nice OrWall, which is exactly how we think a Tor-enabled phone should work in general. Users should have full control over what information applications can access on their phones, including Internet access, and have control over how that Internet access happens. OrWall provides the networking component of this access control. It allows the user to choose which apps route through Tor, which route through non-Tor, and which can't access the Internet at all. It also has an option to let a specific Voice over IP app, like Signal, bypass Tor for the UDP voice data channel, while still sending call setup information over Tor.

At around the time that our blog post went up, the Copperhead project began producing hardened builds of Android. The hardening features make it more difficult to exploit Android vulnerabilities, and also provide WiFi MAC address randomization, so that it is no longer trivial to track devices using this information.

Copperhead is also the only Android ROM that supports verified boot, which prevents exploits from modifying the boot, system, recovery, and vendor device partitions. Copperhead has also extended this protection by preventing system applications from being overridden by Google Play Store apps, or from writing bytecode to writable partitions (where it could be modified and infected). This makes Copperhead an excellent choice for our base system.

The Copperhead Tor Phone Prototype

Upon the foundation of Copperhead, Orbot, OrWall, F-Droid, and other community contributions, we have built an installation process that installs a new Copperhead phone with Orbot, OrWall, SuperUser, Google Play, and MyAppList with a list of recommended apps from F-Droid.

We require SuperUser and OrWall instead of using the VPN APIs because the Android VPN APIs are still not as reliable as a firewall in terms of preventing leaks. Without a firewall-based solution, the VPN can leak at boot, or if Orbot is killed or crashes. Additionally, DNS leaks outside of Tor still occur with the VPN APIs on some systems.
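To make the firewall approach more concrete, here is a minimal sketch of the kind of per-app iptables rules such a firewall relies on. This is not OrWall's actual rule set. The helper name tor_rules_for_uid is hypothetical, and the ports (TransPort 9040, DNSPort 5400) are assumptions about the local Tor configuration. The function only prints rules, it does not apply them.

```shell
#!/bin/sh
# Sketch only: prints (does not apply) iptables rules that force one app's
# traffic through Tor. This is NOT OrWall's real rule set.
# Assumptions: Tor's TransPort listens on 9040 and its DNSPort on 5400.

TRANS_PORT=9040   # assumed Tor TransPort
DNS_PORT=5400     # assumed Tor DNSPort

# tor_rules_for_uid UID: emit rules for a single app UID (hypothetical helper).
tor_rules_for_uid() {
    uid="$1"
    # Redirect the app's DNS lookups to Tor's DNSPort.
    echo "iptables -t nat -A OUTPUT -m owner --uid-owner $uid -p udp --dport 53 -j REDIRECT --to-ports $DNS_PORT"
    # Redirect the app's new TCP connections to Tor's TransPort.
    echo "iptables -t nat -A OUTPUT -m owner --uid-owner $uid -p tcp --syn -j REDIRECT --to-ports $TRANS_PORT"
    # Allow the redirected traffic, which is now addressed to localhost.
    echo "iptables -A OUTPUT -m owner --uid-owner $uid -d 127.0.0.1/32 -j ACCEPT"
    # Reject everything else this app tries to send.
    echo "iptables -A OUTPUT -m owner --uid-owner $uid -j REJECT"
}
```

For example, tor_rules_for_uid 10123 prints four rules for the app whose UID is 10123 (a made-up value). Because rules like these live in the kernel, they stay in force when Orbot is not running: redirected connections simply fail instead of leaving the phone outside of Tor.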

We provide Google Play primarily because Signal still requires it, but also because some users probably also want apps from the Play Store. You do not need a Google account to use Signal, but without one you need to download the Signal Android package and sideload it manually (via adb install).

The need to install these components to the system partition means that we must re-sign the Copperhead image and updates if we want to keep the ability to have system integrity from Verified Boot.

Thankfully, the Nexus Devices supported by Copperhead allow the use of user-generated keys. The installation process simply takes a Copperhead image, installs our additional apps, and signs it with the new keys.

Systemic Threats to Software Freedom

Unfortunately, not only is Copperhead the only Android rebuild that supports Verified Boot, but the Google Nexus/Pixel hardware is the only Android hardware that allows the user to install their own keys to retain both the ability to modify the device, as well as have the filesystem security provided by verified boot.

This, combined with Google's increasing hostility towards Android as a fully Open Source platform, as well as the difficulty for external entities to keep up with Android's surprise release and opaque development processes, means that the ability of end-users to use, study, share, and improve the Android system is in great jeopardy.

This all means that the Android platform is effectively moving to the kind of "Look but don't touch" Shared Source model that Microsoft tried in the early 2000s. However, instead of being explicit about this, Google appears to be doing it surreptitiously. It is a deeply disturbing trend.

It is unfortunate that Google seems to see locking down Android as the only solution to the fragmentation and resulting insecurity of the Android platform. We believe that more transparent development and release processes, along with deals for longer device firmware support from SoC vendors, would go a long way to ensuring that it is easier for good OEM players to stay up to date. Simply moving more components to Google Play, even though it will keep those components up to date, does not solve the systemic problem that there are still no OEM incentives to update the base system. Users of old AOSP base systems will always be vulnerable to library, daemon, and operating system issues. Simply giving them slightly more up to date apps is a bandaid that both reduces freedom and does not solve the root security problems. Moreover, as more components and apps are moved to closed source versions, Google is reducing its ability to resist the demand that backdoors be introduced. It is much harder to backdoor an open source component (especially with reproducible builds and binary transparency) than a closed source one.

If Google Play is to be used as a source of leverage to solve this problem, a far better approach would be to use it as a pressure point to mandate that OEMs keep their base system updated. If they fail to do so, their users will begin to lose Google Play functionality, with proper warning that notifies them that their vendor is not honoring their support agreement. In a more extreme version, the Android SDK itself could have compiled code that degrades app functionality or disables apps entirely when the base system becomes outdated.

Another option would be to change the license of AOSP itself to require that any parties that distribute binaries of the base system must provide updates to all devices for some minimum period of time. That would create a legal avenue for class-action lawsuits or other legal action against OEMs that make "fire and forget" devices that leave their users vulnerable, and endanger the Internet itself.

While extreme, both of these options would be preferable to completely giving up on free and open computing for the future of the Internet. Google should be competing on overall Google account integration experience, security, app selection, and media store features. They should use their competitive position to encourage/enforce good OEM behavior, not to create barriers and bandaids that end up enabling yet more fragmentation due to out of date (and insecure) devices.

It is for this reason that we believe that projects like Copperhead are incredibly important to support. Once we lose these freedoms on mobile, we may never get them back. It is especially troubling to imagine a future where mobile access to the Internet is the primary form of Internet usage, and for that usage, all users are forced to choose between having either security or freedom.

Hardware Choice

The hardware for this prototype is the Google Nexus 6P. While we would prefer to support lower end models for low income demographics, only the Nexus and Pixel lines support Verified Boot with user-controlled keys. We are not aware of any other models that allow this, but we would love to hear if there are any that do.

In theory, installation should work for any of the devices supported by Copperhead, but updating the device will require the addition of an updater-script and an adaptation of the releasetools.py for that device, to convert the radio and bootloader images to the OTA update format.

If you are not allergic to buying hardware online, we highly recommend that you order them from the Copperhead store. The devices are shipped with tamper-evident security tape, for what it's worth. Otherwise, if you're lucky, you might still be able to find a 6P at your local electronics retail store. Please consider donating to Copperhead anyway. The project is doing everything right, and could use your support.

Hopefully, we can add support for the newer Pixel devices as soon as AOSP (and Copperhead) supports them, too.

Installation

Before you dive in, remember that this is a prototype, and you will need to be familiar with Linux.

With the proper prerequisites, installation should be as simple as checking out the Mission Improbable git repository, and downloading a Copperhead factory image for your device.

The run_all.sh script should walk you through a series of steps, printing out instructions for unlocking the phone and flashing the system. Please read the instructions in the repository for full installation details.

The very first device boot after installation will take a while, so be patient. During this boot, you should note the fingerprint of your key on the yellow boot splash screen. That fingerprint is what authenticates the use of your key and the rest of the boot process.

Once the system is booted, after you have given Google Play Services the Location and Storage permissions (as per the instructions printed by the script), make sure you set the Date and Time accurately, or Orbot will not be able to connect to the Tor Network.

Then, you can start Orbot, and allow F-Droid, Download Manager, the Copperhead updater, Google Play Services (if you want to use Signal), and any other apps you want to access the network.

NOTE: To keep Orbot up to date, you will have to go into the F-Droid Repositories option, and click Guardian Project Official Releases.

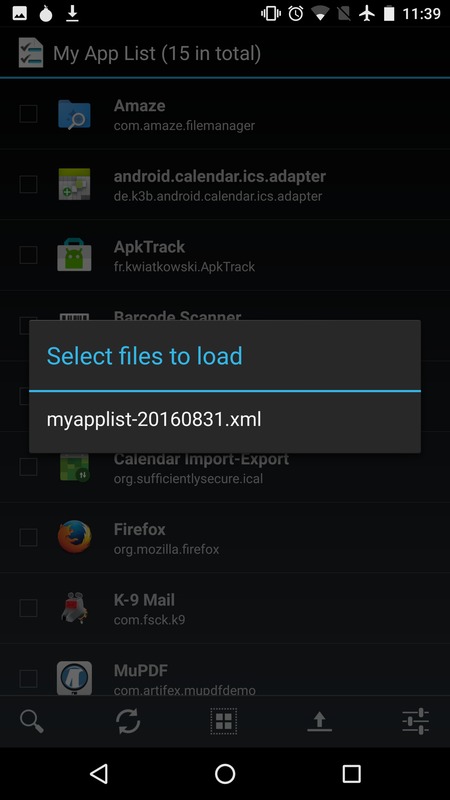

Installation: F-Droid apps

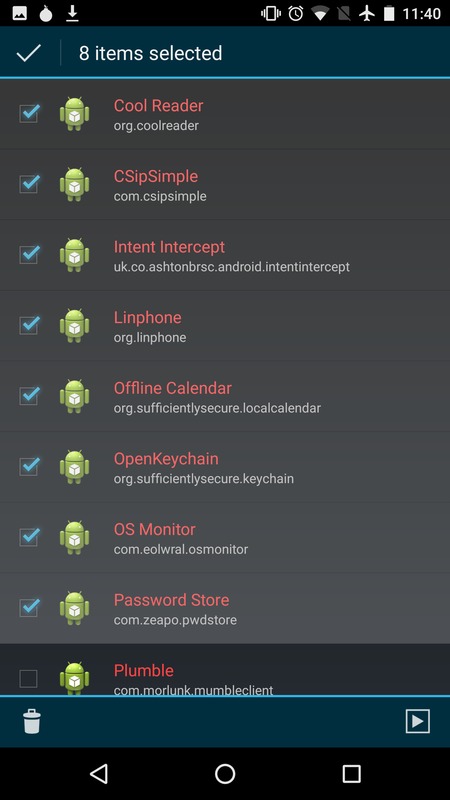

Once you have networking and F-Droid working, you can use MyAppList to install apps from F-Droid. Our installation provides a list of useful apps for MyAppList. The MyAppsList app will allow you to select the subset you want, and install those apps in succession by invoking F-Droid. Start this process by clicking on the upward arrow at the bottom right of the screen:

Alternately, you can add links to additional F-Droid packages in the apk url list prior to running the installation, and they will be downloaded and installed during run_all.sh.

NOTE: Do not update OrWall past 1.1.0 via F-Droid until issue 121 is fixed, or networking will break.

Installation: Signal

Signal is one of the most useful communications applications to have on your phone. Unfortunately, despite being open source itself, Signal is not included in F-Droid, for historical reasons. Near as we can tell, most of the issues behind the argument have since been resolved. Now that Signal is reproducible, we see no reason why it can't be included in some F-Droid repo, if not the F-Droid repo, so long as it is the same Signal with the same key. It is unfortunate to see so much disagreement over this point, though. Even if Signal won't meet the criteria for the official F-Droid repo (or wherever that tire fire of a flamewar is at right now), we wish that at the very least it could meet the criteria for an alternate "Non-Free" repo, much like the Debian project provides. Nothing is preventing the redistribution of the official Signal apk.

For now, if you do not wish to use a Google account with Google Play, it is possible to download the Signal apks from one of the apk mirror sites (such as APK4fun, apkdot.com, or apkplz.com). To ensure that you have the official Signal apk, perform the following:

- Download the apk.

- Unzip the apk with unzip org.thoughtcrime.securesms.apk

- Verify that the signing key is the official key with keytool -printcert -file META-INF/CERT.RSA

- You should see a line with SHA256: 29:F3:4E:5F:27:F2:11:B4:24:BC:5B:F9:D6:71:62:C0 EA:FB:A2:DA:35:AF:35:C1:64:16:FC:44:62:76:BA:26

- Make sure that fingerprint matches (the space was added for formatting).

- Verify that the contents of that APK are properly signed by that cert with: jarsigner -verify org.thoughtcrime.securesms.apk. You should see jar verified printed out.
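The steps above can be sketched as a small script. This is only an illustration under stated assumptions: the helper names (normalize_fp, fp_matches, verify_signal_apk) are made up for this example, and it assumes unzip, keytool, and jarsigner are installed and that keytool prints the fingerprint on a line starting with SHA256:. The fingerprint is the one quoted above, with the formatting space replaced by a colon.

```shell
#!/bin/sh
# Sketch of the manual verification steps above. Helper names are hypothetical.

# Official Signal signing-key SHA256 fingerprint, as quoted in this post.
SIGNAL_FP="29:F3:4E:5F:27:F2:11:B4:24:BC:5B:F9:D6:71:62:C0:EA:FB:A2:DA:35:AF:35:C1:64:16:FC:44:62:76:BA:26"

# normalize_fp FP: strip colons and whitespace and uppercase the hex digits,
# so that formatting differences do not affect the comparison.
normalize_fp() {
    printf '%s' "$1" | tr -d ': \t\n' | tr 'a-f' 'A-F'
}

# fp_matches ACTUAL EXPECTED: succeed only if both fingerprints are identical.
fp_matches() {
    [ "$(normalize_fp "$1")" = "$(normalize_fp "$2")" ]
}

# verify_signal_apk APK: run the unzip, keytool, and jarsigner checks in order.
verify_signal_apk() {
    apk="$1"
    tmp="$(mktemp -d)" || return 1
    # Extract only the signing certificate from the apk.
    unzip -q -o "$apk" 'META-INF/CERT.RSA' -d "$tmp" || return 1
    # Pull the SHA256 fingerprint line out of keytool's output.
    actual="$(keytool -printcert -file "$tmp/META-INF/CERT.RSA" \
        | sed -n 's/^[[:space:]]*SHA256:[[:space:]]*//p')"
    rm -rf "$tmp"
    fp_matches "$actual" "$SIGNAL_FP" || { echo "fingerprint mismatch" >&2; return 1; }
    # Check that the apk contents are signed by that certificate.
    jarsigner -verify "$apk" | grep -q 'jar verified' || { echo "jarsigner failed" >&2; return 1; }
    echo "OK: $apk is signed by the expected key"
}
```

You would call it as verify_signal_apk org.thoughtcrime.securesms.apk and only continue if it prints the OK line.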

Then, you can install the Signal APK via adb with adb install org.thoughtcrime.securesms.apk. You can verify you're up to date with the version in the app store with ApkTrack.

For voice calls to work, select Signal as the SIP application in OrWall, and allow SIP access.

Updates

Because Verified Boot ensures filesystem integrity at the device block level, and because we modify the root and system filesystems, normal over the air updates will not work. The fact that we use different device keys will prevent the official updates from installing at all, but even if they did, they would remove the installation of Google Play, SuperUser, and the OrWall initial firewall script.

When the phone notifies you of an update, you should instead download the latest Copperhead factory image to the mission-improbable working directory, and use update.sh to convert it into a signed update zip that will get sideloaded and installed by the recovery. You need to have the same keys from the installation in the keys subdirectory.

The update.sh script should walk you through this process.
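Before starting an update, it can save some frustration to confirm that the working directory looks the way the paragraph above describes. The following is a rough sketch, not part of the repository: ready_for_update is a hypothetical helper, and since this post does not list the exact key file names, it only checks that a keys subdirectory exists and is not empty.

```shell
#!/bin/sh
# Sketch: sanity-check a mission-improbable working directory before updating.
# ready_for_update is a hypothetical helper, not a script from the repository.

# ready_for_update [DIR]: succeed if DIR contains update.sh and a non-empty keys/.
ready_for_update() {
    dir="${1:-.}"
    [ -f "$dir/update.sh" ] || { echo "update.sh not found in $dir" >&2; return 1; }
    # The keys generated at installation time must still be present.
    [ -d "$dir/keys" ] && [ -n "$(ls -A "$dir/keys" 2>/dev/null)" ] \
        || { echo "keys/ is missing or empty in $dir" >&2; return 1; }
    echo "ready"
}
```

Run it from the checkout as ready_for_update . and then follow the prompts from update.sh as usual.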

Updates may also reset the system clock, which must be accurate for Orbot to connect to the Tor network. If this happens, you may need to reset the clock manually under Date and Time Settings.
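If the phone is attached over USB, one quick way to spot a reset clock is to compare it with the clock of the computer you are using. This is a minimal sketch, assuming adb is installed and that the computer's own clock is correct. The helper names and the 60 second threshold are arbitrary choices for illustration, not values taken from Tor or Orbot.

```shell
#!/bin/sh
# Sketch: compare the phone's clock with this computer's clock over adb.
# Helper names and the default threshold are illustrative assumptions.

# abs_diff A B: absolute difference between two integers (epoch seconds).
abs_diff() {
    d=$(( $1 - $2 ))
    if [ "$d" -lt 0 ]; then
        d=$(( 0 - d ))
    fi
    echo "$d"
}

# check_device_clock [MAX_SKEW]: warn if the device clock differs too much.
check_device_clock() {
    max="${1:-60}"   # tolerated skew in seconds (arbitrary example value)
    # Read the device time as seconds since the epoch; strip any carriage return.
    device_now="$(adb shell date +%s | tr -d '\r')"
    [ -n "$device_now" ] || { echo "could not read device clock" >&2; return 1; }
    host_now="$(date +%s)"
    skew="$(abs_diff "$device_now" "$host_now")"
    if [ "$skew" -gt "$max" ]; then
        echo "Device clock is off by ${skew}s; fix it under Date and Time Settings."
        return 1
    fi
    echo "Device clock is within ${max}s of this computer."
}
```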

Usage

I use this prototype for all of my personal communications - Email, Signal, XMPP+OTR, Mumble, offline maps and directions in OSMAnd, taking pictures, and reading news and books. I use Intent Intercept to avoid accidentally clicking on links, and to avoid surprising cross-app launching behavior.

For Internet access, I personally use a secondary phone that acts as a router for this phone while it is in airplane mode. That phone has an app store and I use it for less trusted, non-private applications, and for emergency situations should a bug with the device prevent it from functioning properly. However, it is also possible to use a cheap wifi cell router, or simply use the actual cell capabilities on the phone itself. In that case, you may want to look into CSipSimple, and a VoIP provider, but see the Future Work section about potential snags with using SIP and Signal at the same time.

I also often use Google Voice or SIP numbers instead of the number of my actual phone's SIM card just as a general protection measure. I give people this number instead of the phone number of my actual cell device, to prevent remote baseband exploits and other location tracking attacks from being trivial to pull off from a distance. This is a trade-off, though, as you are trusting the VoIP provider with your voice data, and on top of this, many of them do not support encryption for call signaling or voice data, and fewer still support SMS.

For situations where using the cell network at all is either undesirable or impossible (perhaps because it is disabled due to civil unrest), the mesh network messaging app Rumble shows a lot of promise. It supports both public and encrypted groups in a Twitter-like interface run over either a wifi or bluetooth ad-hoc mesh network. It could use some attention.

Future Work

Like the last post on the topic, this prototype obviously has a lot of unfinished pieces and unpolished corners. We've made a lot of progress as a community on many of the future work items from that last post, but many still remain.

Future work: More Device Support

As mentioned above, installation should work on all devices that Copperhead supports out of the box. However, updates require the addition of an updater-script and an adaptation of the releasetools.py for that device, to convert the radio and bootloader images to the OTA update format.

Future Work: MicroG support

Instead of Google Play Services, it might be nice to provide the Open Source MicroG replacements. This requires some hackery to spoof the Google Play Service Signature field, though. Unfortunately, this method creates a permission that any app can request to spoof signatures for any service. We'd be much happier about this if we could find a way for MicroG to be the only app to be able to spoof signatures, and only for the Google services it was replacing. This may be as simple as hardcoding those app ids in an updated version of one of these patches.

Future Work: Netfilter API (or better VPN APIs)

Back in the WhisperCore days, Moxie wrote a Netfilter module using libiptc that enabled apps to edit iptables rules if they had permissions for it. This would eliminate the need for iptables shell callouts for using OrWall, would be more stable and less leaky than the current VPN APIs, and would eliminate the need to have root access on the device (which is additional vulnerability surface). That API needs to be dusted off and updated for Copperhead compatibility, and then OrWall would need to be updated to use it, if present.

Alternatively, the VPN API could be used, if there were ways to prevent leaks at boot, DNS leaks, and leaks if the app is killed or crashes. We'd also want the ability to control specific app network access, and allow bypass of UDP for VoIP apps.

Future Work: Fewer Binary Blobs

There are unfortunately quite a few binary blobs extracted from the Copperhead build tree in the repository. They are enumerated in the README. This was done for expedience. Building some of those components outside of the android build tree is fairly difficult. We would happily accept patches for this, or for replacement tools.

Future Work: F-Droid auto-updates, crash reporting, and install count analytics

These requests come from Moxie. Having these would make him much happier about F-Droid Signal installs.

It turns out that F-Droid supports full auto-updates with the Privileged Extension, which Copperhead is working on including.

Future Work: Build Reproducibility

Copperhead itself is not yet built reproducibly. It's our opinion that this is the AOSP's responsibility, though. If it's not the core team at Google, they should at least fund Copperhead or some other entity to work on it for them. Reproducible builds should be an organizational priority for all software companies. Moreover, in combination with free software, they are an excellent deterrent against backdoors.

In this brave new world, even if we can trust that the NSA won't be ordered to attack American companies to insert backdoors, deteriorating relationships with China and other state actors may mean that their incentives to hold back on such attacks will be greatly reduced. Closed source components can also benefit from reproducible builds, since compromising multiple build systems/build teams is inherently harder than compromising just one.

Future Work: Orbot Stability

Unfortunately, the stability of Orbot itself still leaves a lot to be desired. It is fairly fragile to network disconnects. It often becomes stuck in states that require you to go into the Android Settings for Apps, and then Force Stop Orbot in order for it to be able to reconnect properly. The startup UI is also fragile to network connectivity.

Worse: If you tap the start button either too hard or multiple times while the network is disconnected or while the phone's clock is out of sync, Orbot can become confused and say that it is connected when it is not. Luckily, because the Tor network access security is enforced by OrWall (and the Android kernel), instabilities in Orbot do not risk Tor leaks.

Future Work: Backups and Remote Wipe

Unfortunately, backups are an unsolved problem. In theory, adb backup -all should work, but even the latest adb version from the official Android SDK appears to only back up and restore partial data. Apparently this is due to adb obeying manifest restrictions on apps that request not to be backed up. For the purposes of full device backup, it would be nice to have an adb version that really backed up everything.

Instead, I use the export feature of K-9 Mail, Contacts, and the Calendar Import-Export app to export that data to /sdcard, and then adb pull /sdcard. It would be nice to have an end-to-end encrypted remote backup app, though. Flock had promise, but was unfortunately discontinued.
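The pull step of this workaround can be sketched as follows. It assumes adb is installed and the phone is attached with the exports already written to /sdcard. The helper names and the phone-backup-DATE directory layout are made up for this example.

```shell
#!/bin/sh
# Sketch: copy the exported data on /sdcard into a dated directory.
# Helper names and the directory naming scheme are illustrative assumptions.

# backup_dir_name BASE DATE: build a dated destination directory name.
backup_dir_name() {
    echo "$1/phone-backup-$2"
}

# pull_sdcard [BASE]: copy /sdcard from the attached phone into BASE.
pull_sdcard() {
    base="${1:-.}"
    dest="$(backup_dir_name "$base" "$(date +%Y-%m-%d)")"
    mkdir -p "$dest" || return 1
    adb pull /sdcard "$dest" || return 1
    echo "Exported data copied to $dest"
}
```

Note that the result is a plain, unencrypted copy of your exports, so BASE should point at storage you already trust, such as an encrypted disk.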

Similarly, if a phone is lost, it would be nice to have a cryptographically secure remote wipe feature.

Future Work: Baseband Analysis (and Isolation)

Until phones with auditable baseband isolation are available (the Neo900 looks like a promising candidate), the baseband remains a problem on all of these phones. It is unknown if vulnerabilities or backdoors in the baseband can turn on the mic, make silent calls, or access device memory. Using a portable hotspot or secondary insecure phone is one option for now, but it is still unknown if the baseband is fully disabled in airplane mode. In the previous post, commenters recommended wiping the baseband, but on most phones, this seems to also disable GPS.

It would be useful to audit whether airplane mode fully disables the baseband using either OpenBTS, OsmocomBB, or a custom hardware monitoring device.

Future Work: Wifi AP Scanning Prevention

Copperhead may randomize the MAC address, but it is quite likely that it still tries to connect to configured APs, even if they are not there (see these two XDA threads). This can reveal information about your home and work networks, and any other networks you have configured.

The Wifi Privacy Police app in F-Droid and Smarter WiFi may be options here, but we have not yet had time to audit or test either. Any reports would be useful here.

Future Work: Port Tor Browser to Android

The Guardian Project is undertaking a port of Tor Browser to Android as part of their OrFox project. This port is still incomplete, however. The Tor Project is working on obtaining funding to bring it on par with the desktop Tor Browser.

Future Work: Better SIP Support

Right now, it is difficult to use two or more SIP clients in OrWall. You basically have to switch between them in the settings, which is also fragile and error prone. It would be ideal if OrWall allowed multiple SIP apps to be selected.

Additionally, SIP providers and SIP clients have very poor support for TLS and SRTP encryption for call setup and voice data. I could find only two such providers that advertised this support, but I was unable to actually get TLS and SRTP working with CSipSimple or LinPhone for either of them.

Future Work: Installation and full OTA updates without Linux

In order for this to become a real end-user phone, we need to remove the requirement to use Linux in order to install and update it. Unfortunately, this is tricky. Technically, Google Play can't be distributed in a full Android firmware, so we'd have to get special approval for that. Alternatively, we could make the default install use MicroG, as above. In either case, it should just be a matter of taking the official Copperhead builds, modifying them, changing the update URL, and shipping those devices with Google Play/MicroG and the new OTA location. Copperhead or Tor could easily support multiple device install configurations this way without needing to rebuild everything for each one. So legal issues aside, users could easily have their choice of MicroG, Google Play, or neither.

Personally, I think the demand is higher for some level of Google account integration functionality than what MicroG provides, so it would be nice to find some way to make that work. But there are solid reasons for avoiding the use of a Google account (such as Google's mistreatment of Tor users, the unavailability of Google in certain areas of the world due to censorship of Google, and the technical capability of Google Play to send targeted backdoored versions of apps to specific accounts).

Future Work: Better Boot Key Representation/Authentication

The truncated fingerprint is not the best way to present a key to the user. It is both too short for security, and too hard to read. It would be better to use something like the SSH Randomart representation, or some other visual representation that encodes a cryptographically strong version of the key fingerprint, and asks the user to click through it to boot. Though obviously, if this boot process can also be modified, this may be insufficient.

Future Work: Faster GPS Lock

The GPS on these devices is device-only by default, which can mean it is very slow. It would be useful to find out if µg UnifiedNlp can help, and which of its backends are privacy preserving enough to recommend/enable by default.

Future Work: Sensor Management/Removal

As pointed out in great detail in one of the comments below, these devices have a large number of sensors on them that can be used to create side channels, gather information about the environment, and send it back. The original Mission Impossible post went into quite a bit of detail about how to remove the microphone from the device. This time around, I focused on software security. But like the commenter suggested, you can still go down the hardware modding rabbit hole if you like. Just search YouTube for teardown nexus 6P, or similar.

Changes

Like the last post, this post will likely be updated for a while based on community feedback. Here is the list of those changes so far.

- Added information about secondary SIP/VoIP usage in the Usage section and the Future Work sections.

- Added a warning not to upgrade OrWall until Issue 121 is fixed.

- Describe how we could remove the Linux requirement and have OTA updates, as a Future Work item.

- Remind users to check their key fingerprint at installation and boot, and point out in the Future Work section that this UI could be better.

- Mention the Neo900 in the Future Work: Baseband Isolation section

- Wow, the Signal vs F-Droid issue is a stupid hot mess. Can't we all just get along and share the software? Don't make me sing the RMS song, people... I'll do it...

- Added a note that you need the Guardian Project F-Droid repo to update Orbot.

- Add a thought to the Systemic Threats to Software Freedom section about using licensing to enforce the update requirement in order to use the AOSP.

- Mention ApkTrack for monitoring for Signal updates, and Intent Intercept for avoiding risky clicks.

- Mention alternate location providers as Future Work, and that we need to pick a decent backend.

- Link to Conversations and some other apps in the usage section. Also add some other links here and there.

- Mention that Date and Time must be set correctly for Orbot to connect to the network.

- Added a link to Moxie's netfilter code to the Future Work section, should anyone want to try to dust it off and get it working with OrWall.

- Use keytool instead of sha256sum to verify the Signal key's fingerprint. The CERT.RSA file is not stable across versions.

- The latest Orbot 15.2.0-rc8 still has issues claiming that it is connected when it is not. This is easiest to observe if the system clock is wrong, but it can also happen on network disconnects.

- Add a Future Work section for sensor management/removal

Future Work: Disk Encryption via TPM or Clever Hacks

Unfortunately, even disk encryption and a secure recovery firmware is not enough to fully defend against an adversary with an extended period of physical access to your device.

Cold Boot Attacks are still very much a reality against any form of disk encryption, and the best way to eliminate them is through hardware-assisted secure key storage, such as through a TPM chip on the device itself.

It may also be possible to mitigate these attacks by placing key material in SRAM memory locations that will be overwritten as part of the ARM boot process. If these physical memory locations are stable (and for ARM systems that use the SoC SRAM to boot, they will be), rebooting the device to extract key material will always end up overwriting it. Similar ARM CPU-based encryption defenses have also been explored in the research literature.

Comments

Please note that the comment area below has been archived.

I am absolutely in favor of more open development, from Google accepting patches, to manufacturers handing in drivers for the Linux kernel that Android is based on, to the community enhancing the operating system with FM support, a cipher indicator for the network, and so on. Google seems to ignore some of the really cool things people would like to see and have partially gotten to work.

Having updates only partially in the play service and system apps instead of the core operating system is sad.

About the cipher indicator... wasn't everyone advised to avoid ecommerce when the EXPORT cipher downgrade attack for HTTPS was found?

Wouldn't it be prudent to advise everyone against any phone services related to commerce or social security numbers until the EXPORT cipher downgrade attack in cellular connections is fixed? Will the NSA even allow this gaping hole to be fixed? Isn't it their mission to make America as insecure to attackers as possible?

wow! thank you so very much for this excellent update! The original post was great guidance and extremely helpful and this update makes it even better.

I've had a bit of a play with pEp mail. Looks to be a hardened version of k9 with gpg rolled in. p≡p Mail (Read and write encrypted e-mails) - https://f-droid.org/app/pep.android.k9

Projects under active development.

CSipSimple doesn't seem to be actively developed. Have you evaluated Linphone as an alternative?

LinPhone is easier to configure in some ways (especially for encrypted VoIP providers), but I found it to be a little unstable, and also had a more confusing/cumbersome UI to actually use.

CSIPSimple receives regular updates on android.

Would Google accept a patch to fix VPN?

It seems silly for each ROM to have to choose between maintaining its own VPN implementation or having a broken VPN that offers nothing but a false sense of security.

Will NSA allow Google to offer secure VPN? I'm surprised NSA hasn't banned the Rust language, and/or banned HTTPS.

NSA has succeeded in damaging America's national security far more than ISIS ever could. A real fifth column, the NSA. No agency hates America, hates liberty, hates free trade, or hates democracy more.

Plus one.

NSA is the enemy of every person on the planet--- US persons above all.

FBI is catching up fast, however.

How wonderful of you, US Federal government.

_______________________ <

     _______________________
    < Independency_for_COW! >
     -----------------------
            \   ^__^
             \  (oo)\_______
                (__)\       )\/\
                    ||----w |
                    ||     ||

Why would they ban Rust?

Why would they ban Rust?

How can VPN ever be made

How can VPN ever be made secure when all VPN providers can cooperate with any adversaries they like?

I'm very happy to see this

I'm very happy to see this update. Thank you very much!

Having said that, I think it comes across as unfair to the F-Droid project under the section for Signal.

Signal is not being excluded from F-Droid because of the GCM dependency. They want the program there, and the reason it isn't included is that Moxie Marlinspike has firmly requested that it is not to be. See e.g. https://github.com/LibreSignal/LibreSignal/issues/37

I do agree it's very unfortunate though. Of all applications not currently available in F-Droid, Signal is the one I would most like to see there.

Moxie has good reasons to

Moxie has good reasons to oppose both federation and alternate implementations. They both tend to make protocols difficult to update, and Moxie needs to be able to update the Signal protocol with the least possible amount of friction and overhead, since his team is so small. See http://dephekt.net/2016/11/10/managing-security-trade-offs-why-i-still-…

So I think the F-Droid people were wrong to fight so hard for both a fork and federation. I hear Moxie is investigating creating a release of Signal with the Google Play Services dependency removed, so hopefully this will all get resolved soon either way.

Like Moxie himself I would

Like Moxie himself I would love to see him proven wrong about the necessity of centralization for flexible development and deployment here, but unfortunately I agree he probably has an excellent point about that, at least until someone comes up with a way to circumvent it.

As you point out, OpenWhisperSystems have limited resources and are naturally ultimately entitled to decide where to spend them.

I haven't been part of the discussion about forks and alternatives to GCM, and I can't claim to have followed it in its entirety, but I have seen enough to dislike the tone of some parts, and I think Moxie has been receiving both some requests that OWS has no obligation to cater to (though no doubt usually made with the best of intentions) and unfair criticism.

However, I see federation and replacing GCM as largely separate issues from publication on F-Droid.

F-Droid could (and according to my impression would) have published their own build of the standard Signal-Android, and to the best of my understanding the reason they were refused permission to do so was that Moxie considered F-Droid's security procedures too weak and didn't want to hand over the responsibility in a way that could jeopardize user safety. I don't pretend to know nearly enough to question his judgment in those matters, but looking at the continued discussion, the (admittedly reasonable) concerns seem to have been addressed since then. These days OWS could alternatively provide their own version, built and signed with their own process, in their own repository. That would take additional work, certainly.

I'm persistently puzzled about how neither F-Droid maintaining it nor OWS maintaining a version for F-Droid would be acceptable. In my view the practical alternatives for someone who (again justifiably) wants to keep Google influence out of their phone (but would be prepared to accept, at least for now, the "tickle" from GCM for the sake of Signal) are likely to be far less secure, either by trusting entities much harder to verify or by relying on manual updates that are prone to get delayed sooner or later.

On the off chance there was any doubt, I have a great deal of respect for Moxie Marlinspike and the work of OWS and in no way mean to denigrate either.

I am sad to see the current situation in connection with F-Droid though. Once that resolution you mention arrives I welcome it.

Sorry if this carried the topic too far from your actual article, by the way. It strikes me as very impressive work, and that's where the attention belongs in this context.

The security issue that you

The security issue that you mention is that previously, apps built by f-droid.org had to be signed by a key maintained by f-droid.org instead of the developer's key. This is no longer the case: apps can be submitted to F-Droid as source only, with a link to the developer's own signed binary. f-droid.org will then build the source, and if the result matches the APK that the developer submitted, it will be included as a verified reproducible build. The only issue preventing Signal from being included in f-droid.org is that it includes Google's proprietary binary blob library for GCM.
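To make the "build and compare" idea concrete, here is a minimal Python sketch of that matching step. It is a simplification and not F-Droid's actual procedure: it assumes that two APKs "match" when every archive entry outside `META-INF/` (where the JAR signature files live) has identical contents. The function names `apk_content_digests` and `builds_match` are hypothetical and exist only for this illustration.

```python
import hashlib
import zipfile


def apk_content_digests(apk_path):
    """Map each archive entry to its SHA-256 digest, skipping META-INF/.

    Assumption for this sketch: everything under META-INF/ is signature
    material that legitimately differs between signers, so it is ignored.
    """
    digests = {}
    with zipfile.ZipFile(apk_path) as apk:
        for name in apk.namelist():
            if name.startswith("META-INF/"):
                continue
            digests[name] = hashlib.sha256(apk.read(name)).hexdigest()
    return digests


def builds_match(rebuilt_apk, developer_apk):
    """Return True if both APKs contain the same unsigned payload."""
    return apk_content_digests(rebuilt_apk) == apk_content_digests(developer_apk)
```

If `builds_match("rebuilt.apk", "developer.apk")` is true, the developer's signed binary corresponds to the published source, so it can be distributed under the developer's own key rather than a repository key. The file names here are placeholders.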

Please forgive my ignorance

Please forgive my ignorance but can't this be solved by linking to GCM dynamically instead of statically and using the simpler, less efficient method (Signal keeps a connection open constantly) when GCM is, for whatever reason, unavailable?

Moxie considered F-Droid's

> Moxie considered F-Droid's security procedures too weak and didn't want to hand over the responsibility in a way that could jeopardize user safety.

It does not jeopardize user safety any more than the SMS account registration anti-privacy "feature" and Google Play Services do.

Moxie0 doesn't really think

Moxie0 doesn't really think LibreSignal (deblobbed Signal) is less secure than Signal.

The only possible real reason for someone of his intelligence to make such claims, and to threaten to sue anyone who recompiles his GPL project without taking out all the URLs to central server (which it is useless without) is because he is anti-freedom and anti-liberty, like the NSA.

Yes, but this is not what

Yes, but this is not what the post says. The post implies that F-droid could create a non-free repo to distribute Signal. They can't. Because Moxie doesn't want them to. Signal was at F-droid at some point. So that part of the post is totally inaccurate and unfair for F-droid.

Good point. Moxie originally

Good point. Moxie originally blocked the distribution of a rebuilt Signal with a different key, which I also think was legitimate for him to do.

However, now that Signal is reproducible nothing should be stopping F-Droid from distributing the official Signal with the official key. Nothing is stopping anyone from redistributing the Signal apk in its original form, either.

I updated the post to reflect this.

Whisper Systems could easily

Whisper Systems could easily run an F-Droid repository on their own website, then we could include it in F-Droid like we do the Guardian Project, and users would just need to flip a switch to enable it. They could also use the fdroidserver stack to have a fully reproducible build, which automatically feeds into that repository. This would work with the current source code as it is, with the proprietary Google GCM library in it. I'm happy to help anyone with this process.

> We provide Google Play

> We provide Google Play primarily because Signal

We can haz pribacee if we comply wit ur controls.

Prob got dem autro-updates XD

E.T. phone home.

Are you by any chance using

Are you by any chance using the Unix "jive" filter? To thwart stylometry? To mock Sheriff Dave Clarke of Milwaukee County?

Orwall is not updating

Orwall is not updating anymore:

"Important announcement

After many thoughts, we have to put a term to orWall dev. The code won't be updated anymore (and hasn't be for ages), and it still has some security flaws as you might see in the opened issues."

https://orwall.org/

Hrmm. It was my

Hrmm. It was my understanding that OrWall maintenance was taken over by Henri Gourvest. But perhaps he stopped, too..

If so, we need a new OrWall maintainer... It would be sad to lose it.

It looks like it may not be

It looks like it may not be as dead as that site suggests:

https://twitter.com/orWallApp

"orWall Application @orWallApp Jun 27

Sad we had to announce EOL in order to get some people involved. But hey, project's not dead thanks to @hgourvest! A big thanks to him!"

About Signal: Eutopia's

About Signal: Eutopia's F-Droid repository has a working build of Signal that uses WebSocket instead of GCM, and thus doesn't depend on Google Play Services.

https://fdroid.eutopia.cz/

I don't see that getting

I don't see that getting distributed with any Tor products until it is mainlined, but it's a great step in the right direction! I would definitely like to see Signal switch to an open transport mechanism.

I don't think this build

I don't think this build works anymore, as Moxie asked them to stop distributing up-to-date versions. I used to use it, but it stopped working, so now I use the official Signal build alongside the gapps microG Google Play Services replacement. Maybe there's a new build that works on Eutopia now, but it certainly didn't for a while. Also I found the websockets version to be less reliable for prompt delivery and reception of messages.

Future Work: Baseband

Future Work: Baseband Analysis (and Isolation)

.

.

Just to mention it, the Neo900 project has exactly this isolation and monitoring (not analysis, since that's basically not feasible) as a major design goal. See http://neo900.org/resources and the Neo900 Interactive Block Diagram: http://neo900.org/stuff/block-diagrams/neo900/neo900.html

/joerg

PS: the SnowdenBunnie

PS: the SnowdenBunnie approach (sleeve) is basically missing the point and probably also won't work the way Bunnie hopes, for technical reasons related to how the iPhone manages antennas

Make a TrustZone kernel

Make a TrustZone kernel without memory corruption or cipher implementation bugs and you can actually harden apps even on unpatched phones. ARM actually has really good isolation.

Even if this were true,

Even if this were true, unpatched phones are an externality that the end user and the Internet are forced to pay for.

But it's not true. All that has to happen is that the base system becomes compromised, and then there are plenty of microphones, sensors, wifi information, and other side channels to get plenty of valuable and damaging information about the user, and to attack other systems nearby.

Google's mismanagement of the ecosystem is disastrous beyond the point where bandaids like this will fix it. They should focus on competing with their competitors, not hamstringing people working with the base system, or fragmenting the platform. They should go scorched earth on $50 phone manufacturers who don't provide updates. Users should be told that if they buy a $50 phone, it may stop working properly in 6 months when it is out of date. There is no other way to stop the race to the bottom.

Another alternative to using Google Play as a source of leverage is to create a new license for AOSP, such that it is a violation of the license to fail to provide updates for devices. That would create a legal avenue for Google and end-users to go after violators.

An alternative to rumble is

An alternative to rumble is scuttlebot. It seems to be more actively maintained. Mobile version coming. https://github.com/ssbc/

I don't understand why I

I don't understand why I need Google Play Services or Signal to have a Tor enabled android phone.

Also Copperhead is nice, but it's not FOSS. You should check their license.

Replicant is good but also

Replicant is good but also not completely free/libre (it requires proprietary baseband firmware). Replicant and CopperheadOS are the only roms anyone should run, given the choice, which sadly few are. Avoid Verizon at any cost if you value freedom and liberty. Get a Nexus or Pixel if you can.

Replicant itself is

Replicant itself is completely free, but yes, of course the baseband remains a problem. And it isn't particularly up-to-date at the moment, which carries its own risks (though they're making good progress on a version based on a newer android base). There are no ideal options at the moment, unfortunately, but as you say, Replicant do good work here, as do (from the looks of it) Copperhead.

Please DO buy your device

Please DO buy your device from CopperheadOS Store directly. They desperately need the funding (and contributors) to keep the project alive. For basics like servers, devices to port to and survival income for what's been an ongoing FULL-TIME development effort for a couple of years already. This project is too important to die. So do the right thing! At very least, send a considerable donation. It's more than well deserved.

This does seem like quite a

This does seem like quite a task because, as mentioned in the first post, it appears as though the goals of the Android project are almost the exact opposite of the Tor project from a privacy standpoint. I almost think Ubuntu for Phones would be an easier route since it uses a (more-so) mainline kernel, APT, Debian repos, Upstart/systemd, glibc, LXC (for Android drivers), theoretically SELinux, AppArmor, libseccomp2, etc., and many of the other projects we've come to know and have already developed code for on the desktop and server.

Sadly Canonical seems to have abandoned Ubuntu for Android in favor of partnering with OEMs to "sell" the vanilla (no Android drivers) OS preinstalled. While the Meizu Pro 5 is roughly on par with the Nexus 6P (iirc), there aren't many devices to choose from, and practically none if you're in the U.S. I attribute Ubuntu for Phones's failure (if you call it that) mostly to timing -- it came out alongside Firefox OS for Android, Tizen, Windows, and a few other mobile OSes at a time when Android had just reached Apple's market share bracket -- but such is life.

It's sad to see Google pushing AOSP in the direction it is, but I commend the Tor and Copperhead projects for taking on such a giant task. With Rule 41 on its way fast, goodness knows we need it now more than ever.

Bonus points: is it theoretically possible to rebase Copperhead on Replicant (a blob-free Android distro with Debian-like free software policies) for devices that support it?

> With Rule 41 on its way

> With Rule 41 on its way fast,

There has been some confusion in the press about when the changes will take effect if Congress does not act. The date is Thu 1 Dec 2016, not Sat 31 Dec 2016.

I urge all Debian users to install apt-transport-tor which should help you continue to be able to access unbackdoored software, at least in the short term. A key issue here is that governments including the USG can obtain fake "root certs" enabling them to impersonate any site using https, but onion services can circumvent such state-sponsored MITM.
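For anyone unsure what switching to apt-transport-tor involves: once the package is installed and a local Tor client is running, APT source URIs are prefixed with `tor+`, for example `tor+http://` instead of `http://`. The following is a minimal Python sketch of that rewrite for a single `sources.list` line. The helper name `torify_sources_line` is hypothetical, and the mirror hostname in the usage example is only illustrative; the sketch does not touch any files.

```python
import re

# Match the first http:// or https:// scheme that is not already prefixed
# (for example by "tor+"), so that re-running the rewrite is harmless.
_URI_SCHEME = re.compile(r"(?<![\w+])(https?://)")


def torify_sources_line(line):
    """Return an APT source line with its URI scheme prefixed by 'tor+'.

    Lines that are not 'deb' or 'deb-src' entries (comments, blanks)
    are returned unchanged.
    """
    stripped = line.lstrip()
    if not stripped.startswith(("deb ", "deb-src ")):
        return line
    return _URI_SCHEME.sub(r"tor+\1", line, count=1)


print(torify_sources_line("deb http://deb.debian.org/debian stable main"))
```

Review the rewritten lines by hand before placing them in `/etc/apt/sources.list`, and keep a backup of the original file.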

In the event that the USG declares encryption/tor/open-source illegal in coming months, I hope both Debian Project and Tor Project have a plan to protect critical keyrings, equipment, employees, volunteers and other infrastructure needed to ensure their survival (presumably in nations located far outside the USA).

This will be a time when US users will be required to either follow their core beliefs, even at the cost of "going underground" or attempting to circumvent US laws, or to cave into USG oppression.

Please don't risk your

Please don't risk your computer and/or risk your phone like this.

Set up QubesOS with IOMMU sandbox before connecting computer to phone.

If you can flash a ROM, you can install QubesOS. It's really user-friendly and much safer than Debian. Not safe, but less dangerous.

Alas, I cannot flash a

Alas, I cannot flash a ROM.

@ Mike Perry:

Do we have some reason to think your phone will resist FBI hacking come 1 Dec 2016?

DOJ posted on their government blog calling for support for the changes; some Congress persons introduced a bill in the Senate (and the House?) to block the changes until Jul 2017, in order to give the next Congress some time to debate them. There may still be just enough time to stop this nightmare.

A recent article at motherboard.vice.com lists some of the countries where computers have already been attacked by FBI (without "authorization" under US law). An article in techdirt.com (I think) mentioned that FBI sent malware to *every user* of tormail.com, allegedly seeking to identify one person they thought might be using an account there. This indicates that we can expect a blizzard of "legally authorized" phishing of millions of webmail users who use smaller email providers or who have expressed support for BLM or criticism of the Surveillance State.

No, I can't make that

No, I can't make that guarantee. I am only pointing towards a possible future that allows us to retain any computer security in the long term.

In the short term, maybe use iOS. But it is only a matter of time before that is not enough. Here's how this plays out in the long term:

1. iOS becomes the dominant security platform for journalists, activists, dissidents, immigrants, Muslims, crim'nals, terr'ists, and anyone else marked with a colored triangle or two...

2. Some atrocity happens that gives the reactionary elements an excuse to renew their argument for backdoors.

3. The backdoor argument is won in our favor (again), but as a compromise, Apple is compelled to use the iOS app store to deliver targeted backdoors to anyone the police forces deem as undesirable.

4. This mechanism is hijacked by any number of state and non-state adversaries via bribes, exploits, social engineering, and other means, and everyone using the system is vulnerable.

Who knows, #4 may not ever even happen. But I'll bet all my bitcoin #1-3 will, unless something changes really soon. And in this Darkest Timeline, #1-3 should be enough to give us pause.

Don't let me dishearten

Don't let me dishearten you...

It sounds like you could be trying to sell Apple products?

In the UK, new laws are being passed. As I understand it, the new technical warrants that could be served could potentially be used to weaken lots of products, such as Apple's.

I wish I knew how to get around these new draconian UK laws.

> No, I can't make that

> No, I can't make that guarantee. I am only pointing towards a possible future that allows us to retain any computer security in the long term.

Understood. I believe our situation is now so desperate that we must try desperate measures, e.g. using "beta tests" for real.

> Here's how this plays out in the long term:

>

> 1. iOS becomes the dominant security platform for journalists, activists, dissidents, immigrants, Muslims, crim'nals, terr'ists, and anyone else marked with a colored triangle or two...

If you attend any political rallies, please report any overflying Cessna or other aircraft to ACLU. The FBI spyplanes often fly at very low altitudes (1000-1500 ft above ground level, lower than FAA likes for general aviation except very near an airport). They may circle counterclockwise, possibly in very wide circles so that marchers only see/hear them flying in what appears to be a "straight line", but returning every few minutes. They may also fly in tight circles. The planes may carry 1-3 people. FBI spends at least $300/hr to operate the plane, with salaries for the agents (typically a pilot and an observer, and often a liaison with local police) on top of that. Pilots get extra hazard pay for night landings and low level flight near tall structures.

Look for a camera turret often mounted on a mounting arm under the left wing. This turret also contains an infrared laser target designator, as documents obtained by ACLU under FOIA confirm. The camera system can be used to "lock" on individual people in the crowd, and can be used to feed FBI's facial identification system. The laser designator can then be used to indicate to ground crews which person should be followed home, tackled by an arrest team on the ground, or even (worst case) "taken out" by an FBI sniper. This gives new meaning to "tagged", yes?

Not all of the planes carry the cameras, but you may be able to spot the mounting arm. The camera is detachable, so if one plane is in the shop, the agents can remount it on their backup spyplane. There are many pictures on the internet of planes identified as FBI spyplanes by Buzzfeed news reporters in their superb story. After that story appeared, Comey complained that he would have to buy an entirely new fleet (Buzzfeed documented more than 100 FBI spyplanes, not counting planes used by DEA and other federal agencies), and FBI appears to be doing just that. Some of the new planes are single engine planes not made by Cessna.

ACLU suspects that federal spy planes sometimes carry "cell-site simulators", aka IMSI catchers, e.g. Harris Stingray, Kingfish, or comparable gear from DST (NSA's favored purveyor), Cellebrite, or Cobham.

The planes have often been observed monitoring peaceful rallies such as "Islamic Day" or anti-Trump rallies. Sometimes with "comical" results, such as agents failing to observe a shooting several blocks away from the peaceful protest march.

> 2. Some atrocity happens that gives the reactionary elements an excuse to renew their argument for backdoors.

Yes, they already have their Op-Eds and letters to Congress drafted.

NSA and FBI had planned for something like 9/11 well before that happened, and quickly dusted off their plan when the hijackers struck. How nice of Bin Laden to cooperate with their plans.

> 3. The backdoor argument is won in our favor (again), but as a compromise, Apple is compelled to use the iOS app store to deliver targeted backdoors to anyone the police forces deem as undesirable.

Or Tor Project. Unless you all relocate, I fear you will be vulnerable to fascist pressure.

> 4. This mechanism is hijacked by any number of state and non-state adversaries via bribes, exploits, social engineering, and other means, and everyone using the system is vulnerable.

>

> Who knows, #4 may not ever even happen. But I'll bet all my bitcoin #1-3 will, unless something changes really soon. And in this Darkest Timeline, #1-3 should be enough to give us pause.

I have to agree with all that.

Desperate times, desperate times.

In a way, that might work for the resistance, because if enough people have read enough about the rise of the Third Reich to see the clear similarities (history never repeats itself but sometimes it follows a helical path, with the same oppressive and even genocidal ideas coming back with much greater technical sophistication) more people may be willing to take the extraordinary risks which will be required to oppose a vindictive US President who espouses racial/ethnic/religious hatred.

To leave or not to leave? It's an individual decision and I respect that, but I hope key Tor Project employees will seriously consider leaving while you still can.

Just when our situation

Just when our situation seems most hopeless, a welcome tiny but visible beacon of hope shows the way toward restoring the Bill of Rights:

http://arstechnica.com/tech-policy/2016/11/surveillance-firm-slashes-st…

Surveillance firm slashes staff after losing Facebook, Twitter data

ACLU called out Geofeedia for getting social media data and selling it to cops.

[snip article paste]

From ArsTechnica: > During

From ArsTechnica:

> During [US President-elect Donald Trump's post-election] video message—which lasted less than three minutes—Trump touched on a number of areas that are likely to be of interest to Ars readers. However, his only explicit mention of the digital world was of its darker side: "I will ask the Department of Defense, and the chairman of the joint chiefs of staff, to develop a comprehensive plan to protect America's vital infrastructure from cyberattacks."

Sounds like the same Committee Sen. John McCain is calling for, which will seek some way of backdooring everything while somehow reassuring us that no-one could possibly abuse the thing the government doesn't want anyone to call a "backdoor". Yeah, right...

>Do we have some reason to

>Do we have some reason to think your phone will resist FBI hacking come 1 Dec 2016?

That's not really the point here. We all well know here that nothing is hack-proof; the best we can do is make our software hack-resistant, and right now Copperhead seems to be the only option.

From the legislative perspective, it's unclear how much Rule 41 will change in practice: the FBI is already delivering malware to users based on catch-all search warrants authorized by magistrate judges in random jurisdictions. Read "The Playpen Story" blog article by the EFF.

The key part is that currently many judges are throwing away evidence on the grounds that the warrant was invalid. Rule 41 will prevent them from doing this in the future.

It's the old bait and switch: use it to catch child pornographers first and get everyone desensitized to law enforcement hacking or what-have-you, then begin using it on political dissidents and journalists and other "troublemakers."

> Please don't risk your

> Please don't risk your computer and/or risk your phone like this.

"This"? Could you be more specific?

> Please don't risk your

> Please don't risk your computer and/or risk your phone like this.

Risk... "like this"? Like what precisely?

Are you claiming that Peter Palfrader (Debian Project and Tor Project) is mistaken to believe that updating over Tor is safer for at-risk users? (See the announcement some time ago in this blog.)

If so, can you be more specific about the technical risks you perceive?

If it helps, I use Debian mostly off-line, but it's important to update it even though I use Tails as much as possible.

Does anyone know if Shari is

Does anyone know if Shari is in touch with Sen. Wyden (D-OR) about the last ditch effort bipartisan bill he is cosponsoring which would block the changes to Rule 41 until July 2017, in order to give the next Congress the opportunity to debate the changes?

At least a dozen Senators seem to be sponsoring the bill--- see TheHill.com

> With Rule 41 on its way

> With Rule 41 on its way fast, goodness knows we need it now more than ever.

Plus one. Also: a last ditch bipartisan effort to delay the changes (which go into effect Thu 1 Dec 2016 unless Congress acts immediately) has been introduced in the Senate (with a counterpart bill in the House, I believe):

https://www.eff.org/deeplinks/2016/11/give-congress-time-debate-new-gov…

Give Congress Time to Debate New Government Hacking Rule

Kate Tummarello

17 Nov 2016

> If Congress doesn’t act soon, federal investigators will have access to new, sweeping hacking powers due to a rule change set to go into effect on Dec. 1.

>

> That’s why Sens. Chris Coons, Ron Wyden, Mike Lee, and others introduced a bipartisan bill today, the Review the Rule Act, which would push that rule change back to July 1. That would give our elected officials more time to debate whether law enforcement should be able to, with one warrant from one judge, hack into an untold number of computers and devices wherever they’re located.

Thanks for the tip. Looks

Thanks for the tip. Looks like I'll be calling my senators and congressmen yet again. I can't seem to find a bill number for it. Am I missing something?

Senator Wyden's office said

Senator Wyden's office said it doesn't have a Senate bill number yet, but the House version (by Ted Poe, with Wyden et al. as cosponsors) is H.R. 6341. So call your state's federal House representatives' Washington DC offices and tell them you support H.R. 6341, the "Review the Rule Act".

Thank you. Any information

Thank you. Any information about who to call or what H.R. this one is?

Homicide detective says new law would give him more investigatorial power than he would ever want http://thehill.com/blogs/congress-blog/politics/281490-congress-shouldn…

Good stuff, thanks. Any word

Good stuff, thanks.

Any word from Wyden's staffers about the progress of these bills? There is just one week left as I write, but sometimes Congress acts swiftly.

Check the wyden.senate.gov

Check the wyden.senate.gov and Poe.house.gov blogs. You could call their offices and ask, but I don't know if they have any way of actually knowing what kind of progress it's making, or what kind of answer they could give you. Beyond that, Google is your friend. There is news about both bills on plenty of sites, but we won't actually know how much progress we're making until Dec 1st.

Seriously? On this thread

Seriously? On this thread someone would say "Google is your friend." ?! Google is no one's friend. Google's existence depends on creating behavioral profiles of everyone, which will always be vulnerable at some level; if not technically, then legally. Sheesh.

Aw, *#?!$^... Congress has

Aw, *#?!$^...

Congress has left for a five day weekend (as of 23 Nov 2016) without having done anything to move the Review the Rule Act forward.

Staffers won't be back until sometime around 9AM Mon 28 Nov 2016 EST, which gives them less than 48 hours to block the changes to Rule 41.

Seems like every time a baffled citizen assumes the risk of speaking out, saying "let's give the system a chance to do its job", the system does its level best to make us look like simpletons. Thank you very much, Congress.

If you're a patriot then

If you're a patriot then take up arms and execute these fascists who are destroying your country.

Sheriff Dave Clarke of

Sheriff Dave Clarke of Milwaukee county would be thrilled by your words, because in numerous op-eds in major US news media he has claimed that the US is already in a state of "civil war" (his words).

Comparison with the horrors unfolding in Syria shows rather starkly that Sheriff Clarke has lost contact with reality.

IMO it's still worth it to

IMO it's still worth it to leave a voicemail. A two minute call could make a difference for years to come.

Vulture central doesn't hold

Vulture central doesn't hold out much hope for stopping Rule 41 with six days to go until FBI sends us all NIT malware, but Iain Thomson states well the essential point for Tor users all over the world:

http://www.theregister.co.uk/2016/11/23/fbi_rule_41/

FYI: The FBI is being awfully evasive about its fresh cyber-spy powers

Agents want to hack suspected Tor, VPN users at will – no big deal

Iain Thomson

23 Nov 2016

> Senior US senators have expressed concern that the FBI is not being clear about how it intends to use its enhanced powers to spy on American citizens. Those are the spying powers granted by Congressional inaction over an update to Rule 41 of the Federal Rules of Criminal Procedure. These changes will kick in on December 1 unless they are somehow stopped, and it's highly unlikely they will be challenged as we slide into the Thanksgiving weekend.

> ...

> This guilt-by-association concept is particularly worrying for Tor users who just like to anonymize their internet use because they don’t feel like handing over their online viewing data to advertisers, or because they might fear persecution. The US government partially funded Tor for this latter group.

FBI sent malware laden phishing emails to *every* tormail.com account. Come 1 Dec 2016, should we expect them to send malware laden phishing emails to *every* account at Riseup, NoLog, Boum, Yahoo, Google...? I fear we should.

The latest release of Orbot

The latest release of Orbot has addressed the start/stop weirdness, and some of the hanging issues. That said, we are working to update the network listener code to the latest APIs available in Android 6/7. When you are building an app that does the unusual things that Tor requires, and it must run on 10,000+ variations of devices across 10 releases of an operating system, things don't always work perfectly.

+n8fr8

I appreciate how overworked

I appreciate how overworked you are, but please update it on F-droid, not just Google Play, because Google Play allows arbitrary remote code execution by design (to anyone at Google, at your ISP, or who guesses your email password and installs malware through the web interface).

Thank you for your great work.

Sorry if this is a stupid

Sorry if this is a stupid question but do you mean everyone should use the latest release version (15.1.2 very old, only exists in archives) or latest version released (15.2.0-rc8 presumably a release candidate)? Is there any non-horrible option for Android? Besides "don't use Android"?

While the UI is slightly

While the UI is slightly better, I tried 15.2.0-rc-8 and it still has most of these issues. Try setting your clock to an incorrect time, or connecting while there is no Internet access, or from a WiFi access point that is unplugged from the Internet (aka the Tor network is censored). All of these things will cause the same type of failure on every phone model.

The frequent crashing I can understand may be due to Copperhead's unique memory management, but even that seems a bit odd... It is very frequent.

Is the old, stable one or

Is the old, stable one or the new, release candidate one less likely to have remote code execution bugs?

Mike Perry, you are my hero.

Mike Perry, you are my hero.

Thanks, as always, for pushing the envelope by continuing to work on free software for freedom to enable people to communicate securely.

In this case, I don't

In this case, I don't deserve the bulk of the credit. This phone relies on the work of many, many people. I merely put their work together in a unique way to make some points about free software, privacy, and security, and to raise awareness of the gaps and barriers to meeting those ideals and goals.

Awesome! Thanks for all

Awesome! Thanks for all your hard work, Mike.

The other Mike (Pence) is a determined advocate of warrantless dragnet surveillance (he doesn't believe LEAs should need any warrant to search devices, track all phones, or anything else they might want to do for any reason), and it seems certain the changes to Rule 41 will go through, allowing FBI to attack any computer anywhere in the world for any reason. We know that they have already engaged in such ugly actions as sending malware to *every* tormail address, attacking computers in numerous countries outside the US, etc. FBI rank and file strongly support the fascistic ideas of DJT, Mike Pence, the odious Milwaukee County Sheriff Dave Clarke (who has been mentioned as a possible Attorney General or DHS chief in the Trump admin, and who insists that the US is already in a "civil war" [his words] with pro-democracy movements such as BLM). Former congressman Mike Rogers (a former FBI agent) has also been mentioned as a possible cabinet member in the Trump administration. Other potential picks include openly racist retired generals. This is *not* a "normal transition".

So we need technical defenses for all Tor users, but we also need key Tor Project employees to be safe from FBI harassment or worse. Hope you and other key people are discussing options with Shari.

"pro-democracy movements

"pro-democracy movements such as BLM". Yeah right.

You scoff, but it's not as

You scoff, but it's not as if white society has generally truly cared about democracy. Democracy to white American society means when things are most comfortable for themselves.

Anyone who is not a

Anyone who is not a committed fascist or racist should be a fervent supporter of BLM.

The government is fixing to kill all the dissidents regardless of race. And the alternative waiting to replace the government is the gangs and the militias, who are also fixing to murder their way unto the extinction of humanity. BLM opposes brutality by police or by gang.

I see BLM as the most important grassroots movement opposed to American fascism.

The experience of black people in America over the past few centuries is a clear indication of what all non-billionaires can expect to become their lot in Post-America. Progressive enslavement. Unbearable oppression. A choice between a fatal confrontation with the Man or pointless unbearable endless suffering.

How sad that the American experiment which began so bravely in 1775 has ended in such an awful spectacle, while the authoritarians cheer.

Will you take up arms with

Will you take up arms with me Dec 1 if this fascist abuse of legislative loopholes is used to push through rule 41 changes that change America from barely democratic to out-right authoritarian dictatorship? The processes being used to make this change were intended for minor errata only. The thugs abusing it are traitors to their country and the law calls for their execution.

You may be interested in

You may be interested in this article:

https://theintercept.com/2016/11/18/career-racist-jeff-sessions-is-dona…

[copy of article removed for space.. bytes are precious things, people! use them sparingly - Mike]

As horrible as all that is,

As horrible as all that is, there are countless forums dedicated to such topics, and very few about keeping the Internet safe and secure in the face of totalitarian dictatorships (which the US is turning into and arguably already is).

Only the part about "presidential authority in times of war" really applies to information systems, as important as the rest of your post was. Sorry if this is rude of me, but I'm scared of j-trig operatives derailing infosec discussions. Let's talk about solutions to how these madmen are ruining the Internet and destroying the Bill of Rights (and European equivalents like the "right to be let alone").

> It is unknown if

> It is unknown if vulnerabilities or backdoors in the baseband can turn on the mic, make silent calls, or access device memory.

One of the most needed consumer items (which FBI/NSA will hate and try to prevent from ever coming to market) is a dongle with audited open source SDR software which can produce a power spectrum in the range of perhaps 50 MHz to 6 GHz. Simply seeing a sudden spike at 2.4 GHz (for example) when the dongle is near your phone could indicate a silent call from the phone.

No doubt it would take much research and experience to begin to understand what is a "normal" kind of power spectrum in US cities, and what various spikes might indicate.
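To make the "spike versus normal spectrum" idea concrete, here is a minimal sketch in plain Python. It is only an illustration under loud assumptions: a real SDR dongle would deliver complex samples at MHz rates and you would use an FFT library, whereas this uses a naive DFT on a short list of real-valued samples. The function names (`power_spectrum_db`, `find_spikes`, `tone`) and the 10 dB threshold are hypothetical choices, not part of any existing tool.

```python
import cmath
import math


def tone(freq_hz, sample_rate_hz, n, amplitude=1.0):
    """Generate n real samples of a sine tone (stand-in for captured RF data)."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate_hz)
            for i in range(n)]


def power_spectrum_db(samples, sample_rate_hz):
    """Return (frequencies_hz, power_db) using a naive O(n^2) DFT."""
    n = len(samples)
    freqs, power_db = [], []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(samples))
        power = (abs(acc) / n) ** 2
        freqs.append(k * sample_rate_hz / n)
        # Small floor avoids log10(0) for empty bins.
        power_db.append(10 * math.log10(power + 1e-12))
    return freqs, power_db


def find_spikes(baseline_db, current_db, threshold_db=10.0):
    """Indices of bins where the current spectrum exceeds the baseline
    by more than threshold_db (an assumed, arbitrary threshold)."""
    return [i for i, (b, c) in enumerate(zip(baseline_db, current_db))
            if c - b > threshold_db]


if __name__ == "__main__":
    rate, n = 1000, 200
    quiet = tone(50, rate, n)                       # the "normal" environment
    extra = tone(120, rate, n)                      # a new, unexpected emitter
    noisy = [a + b for a, b in zip(quiet, extra)]
    freqs, base_db = power_spectrum_db(quiet, rate)
    _, now_db = power_spectrum_db(noisy, rate)
    for i in find_spikes(base_db, now_db):
        print("unexpected energy near", freqs[i], "Hz")
```

The design is deliberately crude: it stores a baseline spectrum recorded when nothing suspicious is happening and flags only the bins that rise well above it, which is about as much as a power spectrum alone can tell you.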

Just to clarify: consumers

Just to clarify: consumers are coming to understand that they have a legitimate need to check up on what the devices they own (to the extent that "ownership" remains a meaningful concept) are doing.

Is your phone making a silent call without your knowledge? You thought you turned off Bluetooth for your computer, but did you really turn it off? You thought you secured your smart thermostat, but what if it is communicating with a neighbor's device without your knowledge? Is someone using a retro-reflector to spy on your consulate office? Has someone hidden a bug in your bedroom which transmits audio at 2.4 GHz whenever someone speaks?

There is an enormous variety of protocols and spectra to consider, and a power spectrum is a crude tool, but in real time, potentially very useful as an indication that some device appears to be doing something strange.

Modern cities are so noisy, especially in wavelengths used by WiFi internet and phones, that the dongle would become much more useful if you could also buy a Faraday cage and put both the suspect device and your laptop with dongle inside, to try to ensure that the suspect device really is responsible for a suspicious emission of RF energy.

@ Mike, Peter: please pressure Debian to upgrade the initrd bug. I believe this is much more serious for journalists, bloggers, human rights workers than they have yet recognized. It should be patched in stable.

The advantage of a crude

The advantage of a crude spectrum analyzer over something smart enough to analyze signals and tell you if there's anything sketchy going on is that the former can be implemented with simple analog parts, with nothing re-programmable, and of course can be cheaper and easier for someone more skilled than myself to publish a guide on how to make your own with parts from Radioshack.

Making it directional can eliminate false positives from a noisy environment. If you put a compromised laptop in a faraday bag the malware in it may detect this and uninstall itself, and get reinstalled soon after with whatever 0day it used before. The NSA/PLA/other-nation-state-APT's are easily advanced enough to do this.

> The advantage of a crude

> The advantage of a crude spectrum analyzer over something smart enough to analyze signals and tell you if there's anything sketchy going on is that the former can be implemented with simple analog parts, with nothing re-programmable, and of course can be cheaper and easier for someone more skilled than myself to publish a guide on how to make your own with parts from Radioshack.

>

> Making it directional can eliminate false positives from a noisy environment.

Agree with all that, except that Radioshack is bankrupt and may not survive even after restructuring.

> If you put a compromised laptop in a faraday bag the malware in it may detect this and uninstall itself, and get reinstalled soon after with whatever 0day it used before. The NSA/PLA/other-nation-state-APT's are easily advanced enough to do this.

Yes, but we need to balance that with the fact that they have problems of their own. Their resources (money and especially technical talent) are not literally unlimited, they have to prioritize, and when they are asked for help by another agency, ancient rivalries can continue to affect whether they choose to help another agency attack citizens the other agency desires to target.

Researching the RF environment in US cities is one of the most important things US security researchers have by and large *not* been doing. This is very dangerous because governments (not just federal, but even municipal) are doing serious "research" of their own in this area.

Readers of a certain age may recall a cliche of World War II movies, in which we see a Gestapo radio direction finding vehicle pass by the Parisian house where OSS agent Gregory Peck is using his clandestine radio to contact the Free French. Suddenly Gestapo agents burst in spraying machine-gun fire. Finito poor Peck.

Trump advisors such as Sheriff Dave Clarke of Milwaukee County very much want to bring that kind of action to US cities in the year 2017. Don't believe it because *I* say so. Believe it because *he* says so. Check out his numerous Op-Eds declaring that the US is already experiencing a "civil war". His words, not mine.

> @ Mike, Peter: please

> @ Mike, Peter: please pressure Debian to upgrade the initrd bug. I believe this is much more serious for journalists, bloggers, human rights workers than they have yet recognized. It should be patched in stable.

I've tested it and the backdoor works just like the researchers claimed. When your LUKS encrypted device asks for the LUKS passphrase, simply repeatedly hit enter. After about 100 attempts, you will see the initrd shell, allowing you to replace the kernel or firmware with your own version or to plant a keylogger.

In Oct 2015 an unknown actor (even the university PD appeared to admit that the leading suspect is some current or former CIA officer, but the cops declined to question the CIA officer who is stationed on campus) picked the lock to the office of a human rights researcher with Center for Human Rights (University of Washington), which is suing the CIA for rampant human rights abuses during the "Dirty Wars" in Latin America. The intruder took the hard drive containing her legal case notes and left, relocking the office door. This MO is obviously completely unlike a criminal MO.

The same presumed current or former CIA agent could easily have spent a few extra moments exploiting the initrd bug, so that the next time the legit user booted her PC, her LUKS passphrase would be snagged, allowing easy untraceable access on repeat visits by the intruder.

thestranger.com

Two Weeks After It Sued the CIA, Data Is Stolen from the University of Washington's Center for Human Rights

Ansel Herz

21 Oct 2015

seattlepi.com

Break-in reported at UW center fighting CIA on El Salvador

Police investigating after professor’s computer goes missing

Levi Pulkkinen

21 Oct 2015

> One of the most needed

> One of the most needed consumer items (which FBI/NSA will hate and try to prevent from ever coming to market) is a dongle with audited open source SDR software which can produce a power spectrum in the range of perhaps 50 MHz to 6 GHz. Simply seeing a sudden spike at 2.4 GHz (for example) when the dongle is near your phone could indicate a silent call from the phone.

"Professional grade" spectrum analyzers are already widely used by companies and local, provincial and national governments.