New Foundations for Tor Network Experimentation

Hello, Tor World!

Justin Tracey, Ian Goldberg, and I (Rob Jansen) recently published some work that makes it easier to run Tor network experiments under simulation and helps us do a better job of quantifying confidence in simulation results. This post offers some background and a high-level summary of our scientific publication:

Once is Never Enough: Foundations for Sound Statistical Inference in Tor Network Experimentation

30th USENIX Security Symposium (Sec 2021)

Rob Jansen, Justin Tracey, and Ian Goldberg

The research article, video presentation, and slides are available online, and we've also published our research artifacts.

If you don't want to read the entire post (which provides more background and context), here are the main points that we hope you will take away from our work:

Better Models and Tools:

- Contribution: We improved modeling and simulation tools to produce Tor test networks that are more representative of the live Tor network and that we can simulate faster and at larger scales than were previously possible.

- Outcome: We achieved a significant new milestone: we ran simulations with 6,489 relays and 792k simultaneously active users, the largest known Tor network simulations and the first at a network scale of 100%.

New Statistical Methodologies:

- Contribution: We established a new methodology for employing statistical inference to quantify test network sampling error and make more useful predictions from test networks.

- Outcomes: We find that (1) running multiple simulations in independently sampled Tor test networks is necessary to draw statistically significant conclusions, and (2) larger-scale test networks require fewer repeated trials than smaller-scale test networks to reach the same level of confidence in the results.

More details are below!

Background: Tor Network Experiments

Network experimentation is vital to the Tor Project's research, development, and deployment processes. Experiments help us estimate the viability of new research ideas, test newly written code, and measure the real-world effects of new features. Measurements taken during experiments help us gain confidence that Tor is working as we expect.

Experiments are often run directly on the live, public Tor network, the one to which we all connect when we use Tor Browser. Live network experiments are possible when production-ready code is available and deployed through standard Tor software updates, or when code needs to be deployed on only a small number of nodes. Live network experiments allow us to gather, analyze, and assess information that is most relevant to the target, real-world network environment. (We maintain a list of ongoing and recent live network experiments from those who notify us.)

However, live network experiments carry additional, sometimes significant risk to the safety and privacy of Tor users. As outlined by our Research Safety Board, we should conduct experiments in a private test Tor network whenever possible. Test networks such as those that are run in the Shadow network simulator are completely private and segregated from the Internet, providing an environment in which we can run Tor experiments with absolutely no safety or privacy risks. Test networks should be our only choice when evaluating attacks or other experiments that would be unethical to run on the live network.

Private test networks have many important advantages in addition to the safety and privacy benefits they offer:

Test networks can help us more quickly test and debug new code during the development process. Even the best programmers in the world can occasionally introduce a bug that is not covered by more conventional unit or integration testing. Running a larger and more diverse test network can help us exercise complex corner cases and improve test coverage.

Test networks allow us to immediately deploy new code across the entire private network of Tor relays and clients without having to wait for lengthy deployment cycles. (If you run a Tor relay, thank you, and please keep it up to date!) Immediate deployment in test networks not only helps us tighten the development cycle and tune parameters, but also increases our confidence that things will work as expected when the code is deployed to the live network.

Test networks allow the community to more quickly design and evaluate novel research ideas (e.g., a performance enhancing algorithm or protocol) using prototypes without committing the time and effort that would be required to produce production-quality code. This allows us to more quickly learn about and identify design changes that are worth the additional development, deployment, and maintenance costs.

More Realistic Tor Test Networks

Modeling

We want to ensure that Tor test networks that operate independently of the live Tor network still produce results that are relevant to the real world. The first Tor test network models were published about 10 years ago using network data published by Tor metrics, and our methodology has continued to improve over the years thanks to new privacy-preserving measurement systems (PrivEx and PrivCount) and new privacy-preserving measurement studies of Tor network composition and background traffic. These works have enabled us to create private Tor test networks whose characteristics are increasingly similar to those of the live Tor network.

We further advance Tor network modeling in our work. We designed a new network modeling approach that (1) can synthesize the state of the Tor network over time (rather than modeling a static point in time), and (2) can use a small number of background traffic generator processes to accurately simulate the traffic from a much larger number of Tor users (reducing the computing resources required to run an experiment). With these changes, we can now produce Tor test networks that are more representative than those used in previous work.

Performance

In the live Tor network, thousands of relays forward hundreds of Gbit/s of traffic from hundreds of thousands of users at an average point in time. Accurately reproducing the associated traffic properties in a test network requires a significant amount of computing resources. As a result, it has become standard practice for researchers to down-sample relays and create a smaller-scale Tor network that can be run with fewer computing resources. However, as we'll show in the next section, we find that smaller-scale test networks are less representative of the live Tor network and we have significantly less confidence in the results they produce. Therefore, it is beneficial to have more efficient tools that use fewer resources to run a simulation and that allow us to run larger-scale simulations.
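To make "down-sampling relays" concrete, here is a minimal sketch of one plausible approach: sort relays by bandwidth weight, split them into equal-sized strata, and keep the median relay from each stratum. This is an illustration under our own assumptions, not necessarily the procedure that tornettools implements; the function name `downsample_relays` and the synthetic weights are hypothetical.

```python
import random


def downsample_relays(weights, scale):
    """Keep about scale * len(weights) relays, one per bandwidth stratum.

    Hypothetical helper for illustration only.
    """
    if not 0 < scale <= 1:
        raise ValueError("scale must be in (0, 1]")
    ordered = sorted(weights)
    k = max(1, round(len(ordered) * scale))
    sample = []
    for i in range(k):
        # Stratum i covers ordered[lo:hi]; the strata partition the sorted list.
        lo = i * len(ordered) // k
        hi = (i + 1) * len(ordered) // k
        stratum = ordered[lo:hi]
        # Keep the median relay of the stratum as its representative.
        sample.append(stratum[len(stratum) // 2])
    return sample


rng = random.Random(1)
# Synthetic, heavy-tailed relay weights (an assumption, not real Tor data).
relays = [rng.paretovariate(1.5) for _ in range(6489)]
small = downsample_relays(relays, 0.01)
print(len(small))  # 65 relays in a 1% network
```

Sampling per stratum keeps both slow and fast relays in the scaled-down network, but a 1% network still has only a few dozen relays standing in for thousands, which is one intuition for why small networks vary so much from sample to sample.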

After conducting a performance audit of Shadow, the tool we use to run Tor test network experiments, we implemented several accuracy and performance improvements that were merged into Shadow v1.13.2. Our improvements enable us to run Tor simulations faster and at larger scales than were previously possible. With our modeling and performance improvements, we achieved a significant new milestone: we ran simulations with 6,489 relays and 792k simultaneously active users, the largest known Tor network simulations and the first at a network scale of 100%. (Please note that these experiments required a machine with ~4TB of RAM to complete, but we think ongoing work could reduce this by a factor of 10.)

Improving Our Confidence in Test Network Results

A critical but understudied component of Tor network modeling is how the scale of the test network affects our confidence in the results it produces. Due in part to performance and resource limitations, researchers have usually run a single experimental trial in a scaled-down Tor test network. Because test networks are sampled using data from the live Tor network, there is an associated sampling error that must be quantified when making predictions about how the effects observed in sampled Tor networks generalize to the live Tor network. However, the standard practice was to ignore this sampling error.

In our work, we establish a new methodology for employing statistical inference to quantify the sampling error and to guide us toward making more useful predictions from sampled test networks. Our methodology employs repeated sampling and confidence intervals (CIs) to establish the precision of estimations that are made across sampled networks. CIs are a statistical tool to help us do better science; they allow us to make a statistical argument about the extent to which the simulation results are (or are not) relevant to the real world. In particular, CIs help guide us to sample additional Tor networks (and run additional experiment trials) if additional precision is necessary to confirm or reject a research hypothesis.
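As a rough illustration of the idea, the sketch below computes a 95% CI for the mean of a metric measured once per sampled network, using the textbook Student's t interval. It is a simplified stand-in, assuming independent trials and one summary value per network; see the paper for the exact estimators we use. The function name `mean_ci_95` and the example values are hypothetical.

```python
import math
import statistics

# Two-sided 95% Student's t critical values, keyed by the number of trials n
# (degrees of freedom n - 1). Only the trial counts used in this post are listed.
T_CRIT_95 = {5: 2.776, 10: 2.262, 100: 1.984}


def mean_ci_95(samples):
    """Return (low, high) bounds of a 95% CI for the mean of the samples."""
    n = len(samples)
    if n not in T_CRIT_95:
        raise ValueError(f"no critical value tabulated for n={n}")
    mean = statistics.fmean(samples)
    # Standard error of the mean across independently sampled networks.
    sem = statistics.stdev(samples) / math.sqrt(n)
    half_width = T_CRIT_95[n] * sem
    return mean - half_width, mean + half_width


# Hypothetical median download times (seconds), one per sampled test network.
per_network_medians = [1.92, 2.10, 1.85, 2.31, 2.04]
low, high = mean_ci_95(per_network_medians)
print(f"95% CI: [{low:.2f}, {high:.2f}] seconds")
```

If the resulting interval is too wide to support a conclusion, the remedy is to sample more networks and run more trials, which shrinks the standard error.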

We conducted a case study on Tor usage and performance to demonstrate how to apply our methodology to a concrete set of experiments. We considered whether adding 20% additional load to the Tor network would reduce performance; we certainly expect that it should!

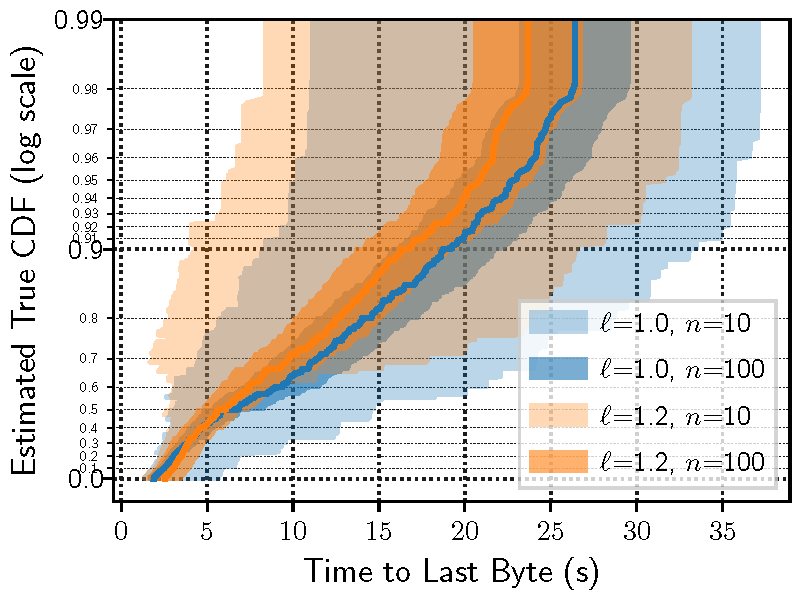

Figure 7a plots the results of applying our statistical inference methodology to 1% scaled-down test networks in which we ran n={10,100} trials with network loads of ℓ={1.0,1.2} times the normal load. We can see that there is considerable overlap in the CIs, even when running n=100 repeated trials. In fact, this graph indicates that adding 20% additional load to the network reduces the time to download files, i.e., makes the network faster--the opposite of the outcome that we expected!

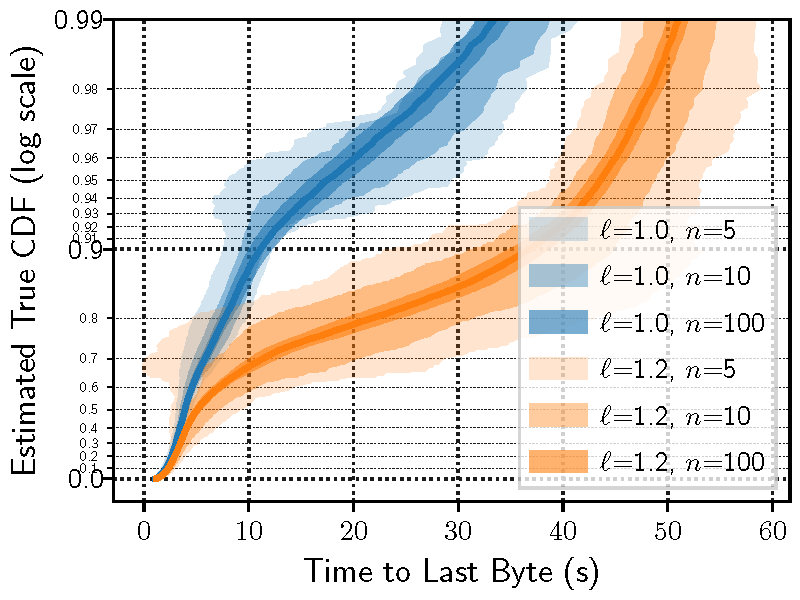

Figure 7b plots the results of applying our statistical inference methodology to much larger 10% scaled-down test networks in which we ran n={5,10,100} trials with network loads of ℓ={1.0,1.2} times the standard load. Here we see that when running only n=5 trials, there is some separation between the CIs but still some overlap in the lower 80% of the distribution. However, running more trials produces more precise (narrower) CIs that increase our confidence in our hypothesis that adding 20% additional load to the network does in fact increase the time to download files.

We conclude from our case study on Tor usage and performance that (1) running multiple simulations in independently sampled Tor test networks is necessary to draw statistically significant conclusions, and (2) fewer simulations are generally needed to achieve a desired CI precision in test networks of larger scale than in those of smaller scale.
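Both conclusions follow from how CI width behaves: the half-width shrinks roughly with the square root of the number of trials, and it grows with the variability between sampled networks. The sketch below checks whether two CIs overlap and gives a back-of-the-envelope estimate of the trials needed for a target half-width. It uses a normal approximation, which is optimistic for small trial counts, and the standard deviations are hypothetical placeholders rather than values from our experiments.

```python
import math
from statistics import NormalDist


def intervals_overlap(a, b):
    """True if closed intervals a=(low, high) and b=(low, high) intersect."""
    return a[0] <= b[1] and b[0] <= a[1]


def trials_needed(stdev, half_width, confidence=0.95):
    """Approximate number of trials n so that z * stdev / sqrt(n) <= half_width."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return math.ceil((z * stdev / half_width) ** 2)


# Hypothetical between-network standard deviations (seconds): smaller-scale
# networks are assumed to vary more from sample to sample than larger ones.
print(trials_needed(stdev=1.0, half_width=0.5))  # 16
print(trials_needed(stdev=0.5, half_width=0.5))  # 4

# Overlapping CIs: the data do not yet separate the two load levels.
print(intervals_overlap((1.8, 2.4), (2.2, 2.9)))  # True
```

In this toy example, halving the between-network variability cuts the required number of trials by a factor of four, which mirrors why larger-scale test networks need fewer repetitions.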

An important takeaway is that our work demonstrates a methodology that those of us running Tor experiments in test networks can now follow in order to (1) estimate the extent to which our experimental results are scientifically meaningful, and (2) guide us toward producing more statistically rigorous conclusions.

Availability

Our methods and tools have been contributed to the open source community. If you're interested in taking advantage of our work, a good place to start is by first setting up Shadow, and then tornettools will help guide you through the process of creating Tor test networks, running simulations, and processing results. You can ask questions on Shadow's discussion page.

Practical Applications of Our Work

The Shadow team has adopted our tools as part of their automated, continuous integration tests which now include testing in private Tor test networks.

The core Tor network team has been building upon our contributions as they develop, test, and tune a new set of congestion control protocols that will begin to roll out in the coming months. Our work has helped them more rapidly prepare test network environments and more thoroughly explore the design space while tuning parameters. For more information, see the GitLab tracking issue, the congestion control proposal, and the Shadow congestion control experiment plan.

Thanks for reading!

All the best, ~Rob

[Thanks to Ian Goldberg and Justin Tracey for input on this post!]
